Scrapy is a hugely popular framework for web scraping and crawling. Python developers love it due to its scalability and extensibility, as the community writes and maintains a lot of useful plugins.

But, it doesn’t natively support scraping dynamic sites.

Combining it with an automation library such as Playwright or Selenium can help, but doing so adds extra work and infrastructure. That’s why in this guide, we’ll look at how to use our /content API to grab the rendered HTML with our headless browsers, for you to then use with Scrapy.

The limitations of default Scrapy and HTTP requests

The fact that it uses good ol’ HTTP requests keeps things simple, but has limitations such as:

- It can only return an initial render of a page

- It won’t execute any JavaScript code

- Waiting for events or selectors isn’t possible

- You can’t interact with the page such as clicking and scrolling

- Bot detectors will easily spot that your request isn’t human

If you want to scrape a single page application built with something like React.js, Angular.js or Vue.js, you’re gonna need a headless browser.

Hosting headless browsers yourself is a nightmare

You might have tried scrapy-playwright or scrapy-splash as a solution. Scrapy-playwright lets you render a page with Playwright through a simple flag in the meta dictionary, while scrapy-splash lets you control a WebKit browser using Lua scripts.

They’re great, but also require you to host your own headless browsers and build your own infrastructure.

It will be fine if you’re only running one or two browsers locally, but scaling them up to tens or hundreds of browsers in a cloud deployment is a constant headache. Chrome is notorious for memory leaks, breakages from updates and consuming huge amounts of resources.

If you really want to go this route, we have an article about managing Chrome on AWS. For people who’d rather not even touch a browser, you can use our /content API.

Get rendered content without running your own browsers, with our /content API

Browserless offers a /content API that returns the HTML content of a page, with the special difference that it’s content that’s been rendered and evaluated inside a browser.

The API receives a JSON payload with the website URL and, optionally, some configuration.

Which will return the full rendered and evaluated HTML content of the page.

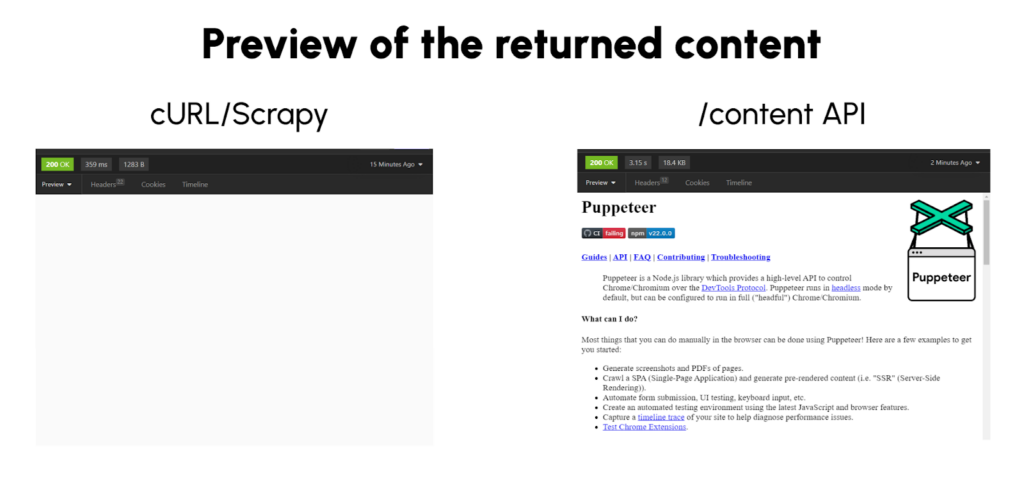

Let’s take a look at the legacy Puppeteer documentation website. It’s entirely dependent on JavaScript execution, so a cURL request will return an empty document. If you use our /content API instead, it can wait for the JavaSCript to be evaluated and return the document.

Using the /content API in Scrapy

Luckily, Scrapy is highly extensible and allows you to run your custom HTTP queries to scrape the data. All you need to do is use the start_requests() method to make a query to the /content API, while keeping your scraping code the same.

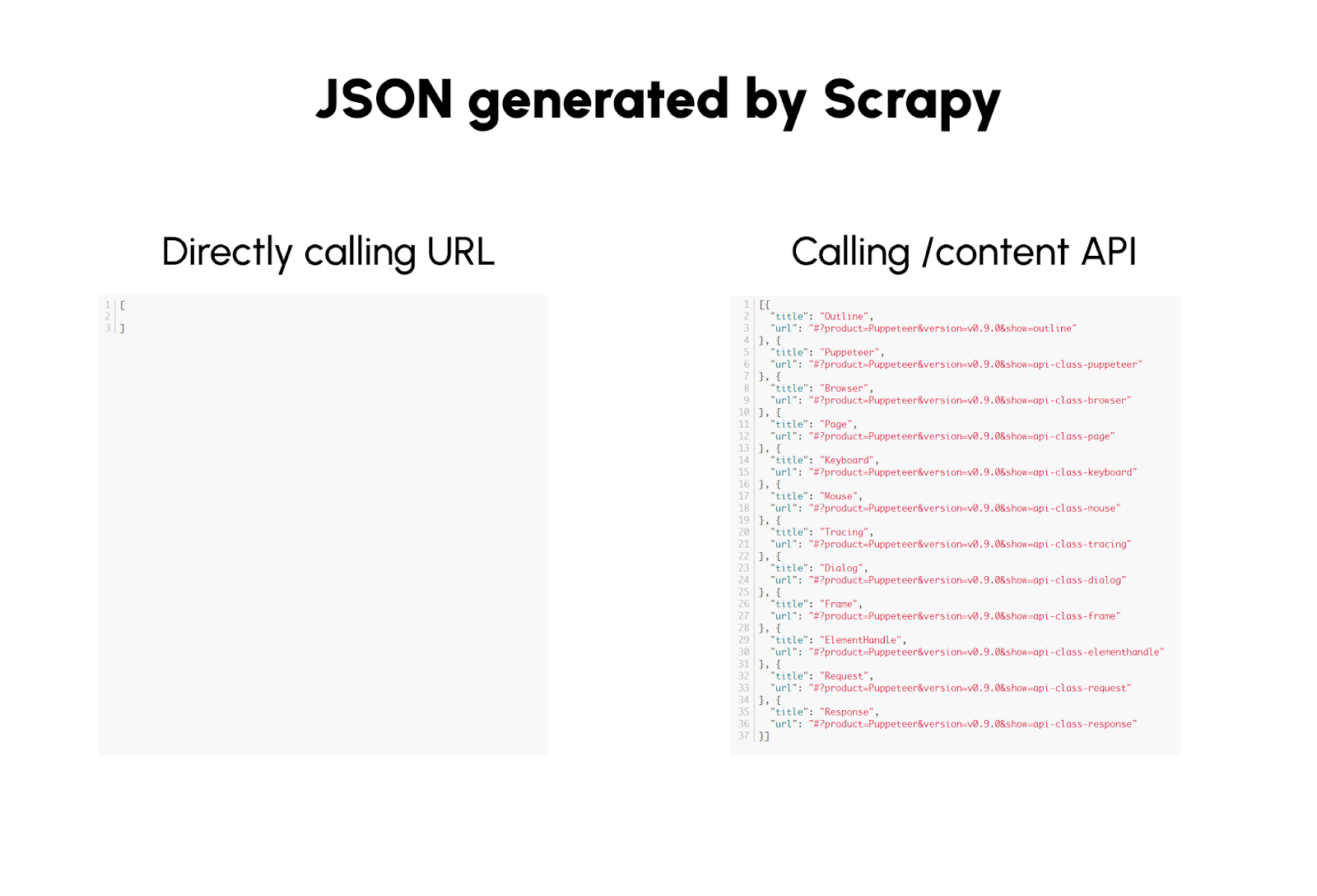

Let’s continue with the Puppeteer Docs page:

And you’ll get the data needed after the page is rendered!

Stealth flags, residential proxies and headful mode

Of course, a big advantage of running requests through a browser is that you can slip past more detectors.

Browserless offers a trio of tools to help with this, which you can enable through our /content API

Inbuilt proxies - our ethically sourced residential proxies can be set with a specified country

Stealth mode - this flag launches the browser in a way that hides many of the signs it’s automated

Headless=false - running Chrome as a “headful” browser makes it less obviously automated, while also automatically handling aspects such as setting realistic user agents

You can run the /content API with these three settings by adding them onto the end of the scrapy.Request URL like so:

Page interactions, waiting for selectors and more

As with all our other APIs, there's a large array of details you can configure to make the most out of it. You can set cookies, execute custom JS code, wait for selectors to appear on the page and more.

If you need to wait for a specific selector to appear in the DOM, to wait for a timeout to happen, or to execute a custom function before scraping, you can set the waitFor property in your JSON payload. This property mirrors Puppeteer's waitFor() method, and can accept one of these parameters:

- A valid CSS selector to be present in the DOM.

- A numerical value (in milliseconds) to wait.

- A custom JavaScript function to execute prior to returning.

For example, with this options, Browserless will wait for any a.pptr-sidebar-item elements before returning the page from the browser:

You can use waitFor to execute a custom function and interact with the page. You’re also able to click elements, set element’s values and anything else that you can do inside the JS virtual machine.

Keep in mind that this function is meant to be executed without changing the page or causing navigation, as navigating during the execution of the code can make things break.

If you need to inject some cookies or HTTP headers, it’s as easy as to include them in the payload, matching puppeteer's setCookie() and setExtraHTTPHeaders() methods.

For full reference of the values accepted by the /content API, please refer to our GitHub repo.

Conclusion

Scrapy is great, but it has limitations such as not being able to handle JS-heavy websites.

Browserless can overcome these obstacles with our production-ready API. With a simple modification to your Scrapy projects, you can render and evaluate dynamic content, handle HTTP authentication, run custom code and more, all without sacrificing Scrapy’s strengths.

Want to run your Scrapy scripts with Browserless? Sign-up for a free trial!