When writing code for web scraping, it's easy to fall into various antipatterns. These aren't full-blown mistakes, but they can make your code inefficient or unreliable.

In this article, we'll look at some of the topics covered by Greg Gorlen, a developer and technical writer at Andela, in his talk at The Browser Conference. These include:

- Efficient resource management

- Handling dynamic content

- Reliable locator selection

- Avoiding fragile element evaluation

- Efficient data extraction techniques

These are helpful best practices for using Playwright or Puppeteer for web scraping, testing, or browser automation. We'll include code examples for both, so let's get to it.

Resource Management in Web Scraping

Proper resource management helps speed up your scripts and save on bandwidth or proxy costs.

Antipattern: Not Blocking Unnecessary Resources

In web scraping, failing to block unnecessary resources such as images, CSS files, and fonts is a common antipattern. When scraping for text or structured data, these resources do not contribute to your scraping goals but significantly slow down the scraping process by consuming bandwidth and increasing page load times.

In this antipattern, no resource blocking is applied, so the browser downloads every image, stylesheet, and other asset on the page, slowing down the scrape without contributing any data.
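For illustration, here is a minimal sketch of the antipattern, assuming Playwright's Node.js API and a page created with browser.newPage(). The function name and the "h2" selector are hypothetical placeholders.

```javascript
// Antipattern (sketch): navigate and scrape with no resource blocking.
// `page` is assumed to be a Playwright Page; the function name and the
// "h2" selector are hypothetical.
async function scrapeHeadingsWithoutBlocking(page, url) {
  // page.goto() waits for the "load" event by default, so every image and
  // stylesheet must finish downloading first, even though only the
  // heading text is used below.
  await page.goto(url);
  return page.locator("h2").allTextContents();
}
```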

Pattern: Blocking Unnecessary Resources

Blocking unnecessary resources is a key resource-management technique in scraping. By preventing the browser from loading CSS, images, fonts, and other non-essential assets, you can substantially reduce page load times and bandwidth usage. This not only speeds up the scraping process but also reduces the load on target servers, which is particularly important when scraping large volumes of data.

Below is a Playwright code snippet that demonstrates resource management in web scraping by blocking unnecessary resources such as CSS, images, and fonts:

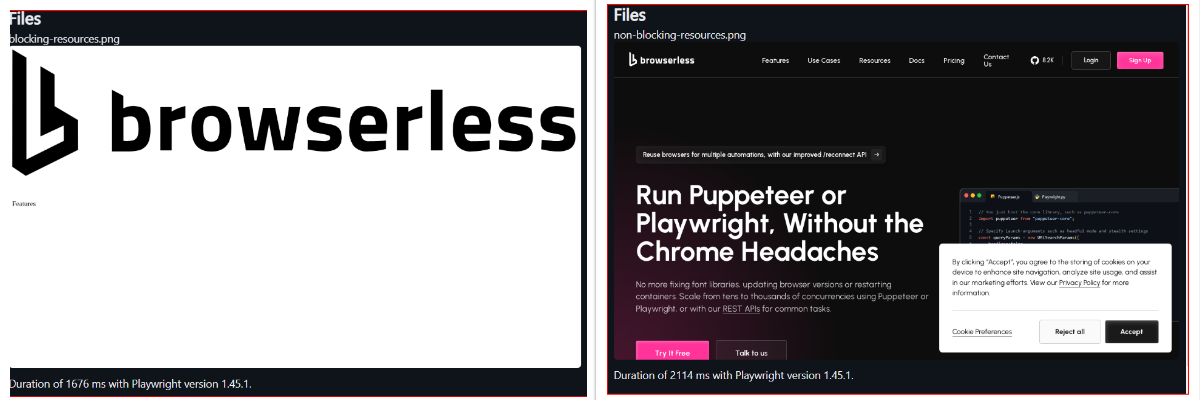

Running the above code reduced the execution time from 2114 ms to 1676 ms.
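In text form, the technique looks roughly like the following minimal sketch, written for both Playwright and Puppeteer since the article covers both. It assumes each library's Node.js API; the helper names and the exact set of blocked resource types are illustrative choices, not part of either library.

```javascript
// Resource types to skip when you only need text or structured data.
// This set is an assumption; tune it for your target site. Scripts are
// deliberately not blocked, since dynamic pages need them to render.
const BLOCKED_RESOURCE_TYPES = new Set(["image", "stylesheet", "font", "media"]);

function shouldBlock(resourceType) {
  return BLOCKED_RESOURCE_TYPES.has(resourceType);
}

// Playwright: route every request through a handler that aborts
// unwanted resource types and lets everything else through.
async function blockResourcesPlaywright(page) {
  await page.route("**/*", (route) =>
    shouldBlock(route.request().resourceType()) ? route.abort() : route.continue()
  );
}

// Puppeteer: turn on request interception, then abort or continue
// each request as it is made.
async function blockResourcesPuppeteer(page) {
  await page.setRequestInterception(true);
  page.on("request", (request) => {
    if (shouldBlock(request.resourceType())) {
      request.abort();
    } else {
      request.continue();
    }
  });
}

// Usage (Playwright): install the handler before navigating.
//   const { chromium } = require("playwright");
//   const browser = await chromium.launch();
//   const page = await browser.newPage();
//   await blockResourcesPlaywright(page);
//   await page.goto("https://example.com");
```

Installing the handler before page.goto() matters: requests made before the route or listener is registered are not intercepted.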

Proper Browser Management

Antipattern: Not Cleaning Up The Browser

A common antipattern in web scraping is failing to properly clean up the browser instance after the scraping task is complete. Launching the browser and running the scrape is straightforward, but if an error occurs during execution and browser.close() is never called, the process may hang indefinitely, consuming resources and potentially causing memory leaks.

In a typical version of this antipattern, the browser is launched and closed in a straight sequence of calls, so if an error is thrown before await browser.close() runs, the cleanup never happens and the browser instance is left running in the background.
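A minimal sketch of that antipattern, assuming Playwright's Node.js API. The browserType parameter is a hypothetical convenience so the sketch does not hard-code an import; in practice you would pass Playwright's chromium.

```javascript
// Antipattern (sketch): cleanup only happens on the "happy path".
// `browserType` is assumed to be Playwright's `chromium` (or firefox/webkit);
// the function name is hypothetical.
async function scrapeTitleUnsafe(browserType, url) {
  const browser = await browserType.launch();
  const page = await browser.newPage();
  await page.goto(url); // if this throws (timeout, DNS error, ...)
  const title = await page.title();
  await browser.close(); // ...this line never runs and the browser is left open
  return title;
}
```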

Pattern: Proper Browser Launch and Closure

Reliably closing your browser once scraping is finished can significantly reduce resource utilization and improve overall efficiency. Efficient browser and page handling begins with properly launching and closing browser instances to prevent resource leaks and maintain system stability.

In Playwright, the dependable way to guarantee closure is to call browser.close() on the Browser instance inside a finally block, so it runs whether the scrape succeeds or throws.
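A minimal sketch of the try...finally approach, assuming Playwright's Node.js API. As above, browserType is a hypothetical parameter standing in for Playwright's chromium, and the function name is illustrative.

```javascript
// Pattern (sketch): guarantee cleanup with try...finally.
// `browserType` is assumed to be Playwright's `chromium`.
async function scrapeTitle(browserType, url) {
  const browser = await browserType.launch({ headless: true });
  try {
    const page = await browser.newPage();
    await page.goto(url);
    // `return await` (not just `return`) makes the title resolve before
    // the finally block closes the browser.
    return await page.title();
  } finally {
    // Runs whether the try block returned or threw.
    await browser.close();
  }
}

// Usage (requires `npm install playwright`):
//   const { chromium } = require("playwright");
//   scrapeTitle(chromium, "https://example.com").then(console.log);
```

The same structure applies to Puppeteer: launch with puppeteer.launch() and keep browser.close() in the finally block.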


Handling Dynamic Content

Dynamic content is any information generated or updated by a web page in real time, often through JavaScript or other client-side technologies. It poses a significant challenge in web scraping because the data you want may not exist in the initial HTML; it appears only after scripts run or network requests complete.

Antipattern: Using Fixed Delays with sleep()

A common antipattern in handling dynamic content is using fixed delays, often implemented with a sleep function, to wait for elements to load. This approach is unreliable and inefficient because it does not account for varying network conditions or how long the content actually takes to become available.

Fixed delays lead either to excessive waiting, which wastes time, or to insufficient waiting, which causes flaky failures when elements are not yet present. Playwright discourages fixed waits such as page.waitForTimeout() for this reason, and Puppeteer has removed its page.waitForTimeout() method altogether.
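A minimal sketch of the fixed-delay antipattern, assuming Playwright's Node.js API. The ".result" selector, the function name, and the default 3000 ms delay are hypothetical.

```javascript
// Antipattern (sketch): guess how long the content takes to load.
// ".result" and the 3000 ms default are hypothetical.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function scrapeResultsWithSleep(page, url, delayMs = 3000) {
  await page.goto(url);
  // Too short on a slow network: the results haven't rendered yet, so
  // allTextContents() returns an empty array. Too long on a fast
  // network: the script sits idle for no reason.
  await sleep(delayMs);
  return page.locator(".result").allTextContents();
}
```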

Pattern: Waiting for Elements Using Precise Predicate

Handling dynamic content efficiently is important in web scraping to ensure the reliability and accuracy of your web scrapers.

A common and effective pattern is to wait for a specific condition before interacting with the page. Playwright and Puppeteer both provide methods for this: page.waitForSelector() waits for a particular element to appear in the DOM, page.waitForResponse() waits for a network response matching a URL or predicate, and page.waitForFunction() waits for a custom condition to become true. Each throws a timeout error if the wait takes too long.

The page.waitForSelector() method, for example, resolves as soon as a matching element appears, so the script waits exactly as long as the page needs and no longer.
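A minimal sketch of waiting on a precise predicate instead of a fixed delay, assuming Playwright's Node.js API. The ".result" selector, the 10-second timeout, and the helper names are hypothetical.

```javascript
// Pattern (sketch): wait for the exact condition you need, with a timeout.
// `page` is assumed to be a Playwright Page; ".result" is hypothetical.
async function scrapeResults(page, url) {
  await page.goto(url);
  // Resolves as soon as a matching element is visible (Playwright's default
  // state) and throws a TimeoutError after 10 s instead of guessing.
  await page.waitForSelector(".result", { timeout: 10_000 });
  return page.locator(".result").allTextContents();
}

// waitForFunction() handles conditions a single selector can't express,
// such as "at least `min` results have rendered". This uses Playwright's
// (pageFunction, arg) signature; in Puppeteer the second parameter is an
// options object and extra arguments come after it.
async function waitForAtLeast(page, min) {
  await page.waitForFunction(
    (n) => document.querySelectorAll(".result").length >= n,
    min
  );
}
```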

Optimizing Scraping Techniques with Locators

Locators are used to find and identify elements on a web page. They are essential in web scraping for targeting specific pieces of data accurately. Common types of locators include ID, class, tag name, the name attribute, XPath, and CSS selectors.

Antipattern: Using Brittle XPath Selectors

Relying on XPath selectors, particularly long absolute paths, is an antipattern in web scraping because of their inherent brittleness. XPath can be a powerful tool for web scraping; however, XPath expressions can become overly complex and tightly coupled to the specific structure of the HTML document.

This coupling means that even minor changes to the DOM can break the XPath, resulting in your scraper failing and needing frequent maintenance.
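To make that coupling concrete, here is a minimal sketch of a positional XPath, assuming Playwright's Node.js API. The path and function name are hypothetical; the "xpath=" prefix is Playwright's way of selecting its XPath engine explicitly.

```javascript
// Antipattern (sketch): an absolute XPath of the kind DevTools copies.
// The path is hypothetical. It encodes every ancestor and sibling index,
// so adding one wrapper <div> or banner above the price makes it match
// nothing, or the wrong element.
const BRITTLE_PRICE_XPATH = "/html/body/div[2]/div[1]/main/div[3]/span[2]";

async function getPriceBrittle(page) {
  // "xpath=" tells Playwright to evaluate the string as XPath.
  return page.locator(`xpath=${BRITTLE_PRICE_XPATH}`).textContent();
}
```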

Pattern: Use Specific CSS Selectors

Specific CSS selectors allow you to focus on elements on a web page with clarity and accuracy. This reduces the risk of errors when the page structure changes. Unlike more brittle selectors, such as XPath, CSS selectors are generally simpler and more resilient to modifications in HTML.

To avoid the complexities associated with XPath selectors, we strongly advise adopting more robust locator strategies, such as CSS selectors, which are generally more stable, or data attributes added specifically for testing purposes (for example, data-testid). Either can significantly reduce the brittleness of your web scraper.
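A minimal sketch of the more robust alternatives, assuming Playwright's Node.js API. The "product-price" class and data-testid value are hypothetical; use whatever stable hooks your target page actually exposes.

```javascript
// Pattern (sketch): target elements by meaning, not by position.
// The class name and data-testid value are hypothetical.
async function getPrice(page) {
  // A short CSS selector tied to a semantic class survives layout
  // changes that would break a positional XPath.
  return page.locator("span.product-price").textContent();
}

async function getPriceByTestId(page) {
  // getByTestId() matches the data-testid attribute by default.
  // In Puppeteer, the equivalent CSS is:
  //   page.$eval('[data-testid="product-price"]', (el) => el.textContent)
  return page.getByTestId("product-price").textContent();
}
```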

Element Evaluation Best Practices

Antipattern: Using ElementHandles

ElementHandle in web scraping is considered an antipattern because it is less flexible and more prone to errors compared to modern alternatives like page.locator.

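An ElementHandle points to one specific DOM node captured at query time, so it can go stale and lead to brittle code when the page re-renders or its structure changes. In contrast, page.locator stores how to find the element and re-resolves it each time it is used, providing a more robust and descriptive way to locate elements, with support for query strategies such as CSS, XPath, and text matching.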
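A minimal sketch contrasting the two, assuming Playwright's Node.js API and a hypothetical cart page whose counter is re-rendered after a click. The ".cart-count" and "button.add-to-cart" selectors and both function names are hypothetical.

```javascript
// Antipattern (sketch): grab an ElementHandle once, use it later.
// Selectors are hypothetical.
async function readCartCountWithHandle(page) {
  const handle = await page.$(".cart-count"); // null if nothing matches yet
  await page.click("button.add-to-cart");
  // If the click re-rendered the counter, `handle` still points to the old,
  // detached node, so this can return stale text.
  return handle ? handle.textContent() : null;
}

// Pattern (sketch): a Locator stores *how* to find the element and
// re-resolves it on every use, so it always reads the current node.
async function readCartCountWithLocator(page) {
  const count = page.locator(".cart-count");
  await page.locator("button.add-to-cart").click();
  return count.textContent();
}
```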

Pattern: Using $$eval and locators

Using Playwright's and Puppeteer's $$eval methods and locators is a pattern that promotes both reliability and efficiency of your web scrapers.

The $$eval method runs a custom function in the browser against every element matching a CSS selector and returns the result to your script. This is useful when you need to extract data from many elements in a single call.

Playwright's locator method provides a robust way to interact with elements. Unlike a raw XPath or a stored element handle, which may break when the DOM changes, a locator is re-resolved every time it is used and automatically waits for the element before acting on it, making scripts more resilient to changes in the page structure.
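A minimal sketch of bulk extraction both ways, assuming the Node.js APIs of Playwright and Puppeteer. The ".product", ".name", and ".price" selectors and the function names are hypothetical.

```javascript
// Runs inside the browser, so it must not reference Node-side variables.
// ".name" and ".price" are hypothetical selectors.
const extractCards = (cards) =>
  cards.map((card) => ({
    name: card.querySelector(".name")?.textContent.trim() ?? "",
    price: card.querySelector(".price")?.textContent.trim() ?? "",
  }));

// page.$$eval() exists in both Playwright and Puppeteer: one round trip
// for all matching elements, returning only serializable data.
async function scrapeProductsEval(page) {
  return page.$$eval(".product", extractCards);
}

// Playwright's locator equivalent, which its docs recommend over $$eval.
async function scrapeProductsLocator(page) {
  return page.locator(".product").evaluateAll(extractCards);
}
```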

Efficient Data Extraction Techniques

Antipattern: Direct DOM Scraping

Direct DOM scraping is an antipattern that can lead to fragile and unreliable scraping scripts. This approach involves hard-coding locators and directly interacting with the DOM elements using methods like document.querySelector() or document.evaluate(). For example, relying on exact XPath paths can create brittle scrapers that fail when any minor change is made to the page layout.
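A minimal sketch of direct DOM scraping with a hard-coded XPath, assuming Playwright's Node.js API (page.evaluate() works the same way in Puppeteer). The path and function name are hypothetical.

```javascript
// Antipattern (sketch): hand-rolled DOM queries inside page.evaluate().
// The absolute XPath is hypothetical.
async function scrapePriceDirectDom(page) {
  return page.evaluate(() => {
    const result = document.evaluate(
      "/html/body/div[2]/main/div[3]/span[2]",
      document,
      null,
      XPathResult.FIRST_ORDERED_NODE_TYPE,
      null
    );
    // No waiting and no retries: if the layout shifted or the element has
    // not rendered yet, singleNodeValue is null and this returns null.
    return result.singleNodeValue?.textContent ?? null;
  });
}
```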

Pattern: Intercepting Network Requests or Scraping JSON

Intercepting network requests or scraping JSON directly is a more efficient and stable approach to extracting data than scraping the DOM. Many sites load their data from JSON APIs, and capturing those responses gives you the raw, structured data without depending on the page's markup.
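A minimal sketch of capturing the JSON a page fetches, assuming page.waitForResponse(), which exists with a predicate form in both Playwright and Puppeteer. The "/api/products" path is hypothetical; you would find the real endpoint in your browser's DevTools Network tab.

```javascript
// Pattern (sketch): read the JSON the page itself fetches.
// The "/api/products" path and response shape are hypothetical.
async function scrapeProductsFromApi(page, url) {
  // Start waiting *before* navigating so the response can't be missed.
  const responsePromise = page.waitForResponse(
    (response) => response.url().includes("/api/products") && response.status() === 200
  );
  await page.goto(url);
  const response = await responsePromise;
  return response.json(); // already structured: no selectors involved
}
```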

Wrapping Up

In this article, you have learned key aspects of web scraping patterns and antipatterns, including best practices for reliable data extraction. You discovered the importance of using Playwright's methods such as $$eval and locator for efficient and stable element evaluation, avoiding the pitfalls of direct DOM scraping.

This article emphasized using specific CSS selectors over brittle XPath selectors to enhance script robustness.

You also learned about efficient browser and page management techniques, as well as methods for blocking unnecessary resources to optimize performance.

For high-scale web scraping, check out Browserless. Our pool of hosted browsers is ready to use with Puppeteer and Playwright without managing browser deployments.

Grab a free 7-day trial and test it yourself.
