Deploying Puppeteer on Google Compute Engine (GCE) is a powerful way to automate browsers at scale, but it can be tricky to set up and maintain. There are various issues you'll run into, such as missing dependencies.

In this guide, we'll walk through setting up Puppeteer on a GCE instance, from choosing the right machine type to installing the necessary dependencies and configuring your environment for optimal performance.

{{banner}}

Choosing the Right Google Compute Engine Instance

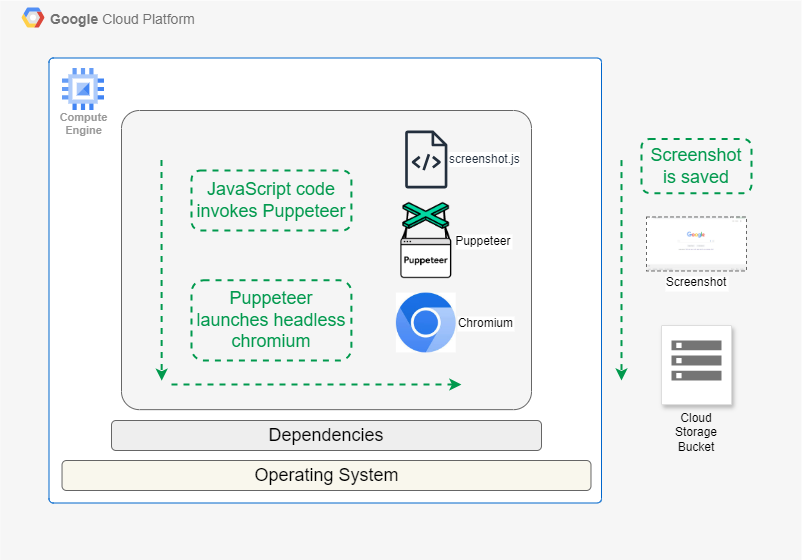

When deploying Puppeteer on Google Cloud, selecting the appropriate machine type is essential for optimal performance. An n1-standard-1 (3.75 GB of RAM) or e2-medium (4 GB) instance typically provides enough resources for Puppeteer to run efficiently, and a larger type with around 8 GB gives more headroom. For storage, allocate at least 10 GB to hold Chromium and any temporary files created during execution.

We will use Ubuntu, since it is supported by Puppeteer and available on Google Compute Engine.

Setting up Compute Engine and Puppeteer

Launch a Compute Engine instance running Ubuntu, check that the storage configuration is correct, and connect via SSH. Then install Node.js and Puppeteer (the `puppeteer` npm package).

Additionally, we will install the Google Cloud Storage client library for JavaScript (the `@google-cloud/storage` npm package), which is needed to upload screenshots to a Google Cloud Storage bucket from GCE.

Installing dependencies

Dependency management sometimes becomes complex, as package names, versions, and availability may change over time and with different OS versions.

The following dependency list is tested for Ubuntu on GCE and represents the current working set. However, remember that as Ubuntu and Puppeteer evolve, this list may need updating.

Without the correct set of dependencies, Puppeteer fails with errors such as:

cannot open shared object file: No such file or directory

An error occurred: Error: Failed to launch the browser process
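When a launch fails this way, it helps to know exactly which libraries are missing before installing packages. The following is a minimal sketch, not part of Puppeteer itself: the function names are illustrative, and it assumes the usual dynamic loader wording ("error while loading shared libraries: ...") and the "=> not found" marker that `ldd` prints for unresolved libraries.

```javascript
// Sketch: pull missing shared-library names out of a failed launch.
// Function names are illustrative; they are not Puppeteer APIs.

// Matches the loader message Chrome prints when a library is absent, e.g.
// "error while loading shared libraries: libnss3.so: cannot open shared object file"
const LOADER_ERROR =
  /error while loading shared libraries: ([^\s:]+): cannot open shared object file/;

// Returns the library named in a launch error message, or null if none is named.
function missingLibraryFromError(message) {
  const match = LOADER_ERROR.exec(String(message));
  return match ? match[1] : null;
}

// `ldd <binary>` marks each unresolved library as "libfoo.so.1 => not found".
// Returns every such library name found in the ldd output.
function missingLibrariesFromLdd(lddOutput) {
  const names = [];
  for (const line of String(lddOutput).split('\n')) {
    const match = /^\s*(\S+) => not found/.exec(line);
    if (match) names.push(match[1]);
  }
  return names;
}
```

The loader reports only the first library it cannot find, so the error message alone tends to reveal missing packages one at a time. Running `ldd` against the path returned by `puppeteer.executablePath()` and passing the output to `missingLibrariesFromLdd` should list all of them at once.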

Configuring Google Cloud Storage

The code in the following section stores the screenshot in Google Cloud Storage. You need to set up a Cloud Storage bucket and give the GCE instance permission to write to it. The instance also needs the Google Cloud Storage client library, which we installed in the previous section.

Set up the bucket in Google Cloud Storage to store screenshots and provide the permissions using the following steps:

- Enable Cloud Storage API - In Google Cloud Console, enable the Cloud Storage API through APIs & Services > Library.

- Adding permissions to GCE - The simplest way to handle authentication is to use the instance's default service account. Ensure this service account has the "Storage Object Creator" role for your bucket in IAM & Admin > IAM.

Granting the service account assigned to the GCE instance the necessary permissions on the bucket, such as Storage Object Creator or Storage Admin, allows the instance to upload objects to Google Cloud Storage.
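Before wiring up screenshots, it can be worth confirming that the permissions actually work from the instance. The sketch below writes a tiny probe object to the bucket. The names `probeObjectName` and `verifyBucketWrite`, the `write-probes/` prefix, and the bucket name in the comment are all assumptions made for illustration; the only client library call relied on is `bucket.file(name).save(data, options)` from `@google-cloud/storage`.

```javascript
// Sketch: confirm the VM's service account can create objects in the bucket.
// `probeObjectName` and `verifyBucketWrite` are illustrative names.

// Storage Object Creator can create objects but not overwrite or delete them,
// so each probe uses a fresh, timestamped object name.
function probeObjectName(now = new Date()) {
  return `write-probes/${now.toISOString().replace(/[:.]/g, '-')}.txt`;
}

// `bucket` is a Bucket from @google-cloud/storage, or any object with the same
// file(name).save(data, options) shape, which keeps the check testable offline.
async function verifyBucketWrite(bucket, now = new Date()) {
  const name = probeObjectName(now);
  try {
    await bucket.file(name).save('ok', { contentType: 'text/plain' });
    return { ok: true, name };
  } catch (err) {
    return { ok: false, name, reason: err.message };
  }
}

// On the VM, credentials come from the attached service account:
//   const { Storage } = require('@google-cloud/storage');
//   verifyBucketWrite(new Storage().bucket('my-screenshots')).then(console.log);
```

If the probe fails with a permission error even though the role is granted, the instance's access scopes are a likely cause: the default scopes allow only read access to Cloud Storage, so the VM may need a read/write or full storage scope.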

Writing the code

The following code takes the website URL as input, captures a screenshot and saves it to the Cloud Storage bucket.

Now you're ready to run the Puppeteer script to capture screenshots. Pass the target URL as input in its full form, including the https:// prefix.

Managing deployments

Installing the dependencies and keeping them up to date is a time-consuming task.

Dependency installation can be hindered by resource contention issues, such as apt cache locks. These occur when multiple package management processes attempt to access shared resources simultaneously, potentially leading to deadlock-like situations.

Troubleshooting typically involves terminating conflicting processes, cleaning up incomplete package installations, and releasing system-wide locks. Proper resolution requires careful handling to maintain system integrity while resolving conflicts.

That’s before you get into issues such as chasing memory leaks and clearing out zombie processes. Without those steps, Puppeteer can gradually require more and more resources.
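One common way to limit this kind of resource creep is to close every page after use and to recycle the whole browser after a fixed number of jobs. The sketch below illustrates the idea under stated assumptions: `createBrowserPool` and `maxJobsPerBrowser` are made-up names, the default of 50 is arbitrary, and the pool is meant for sequential jobs only, not concurrent ones.

```javascript
// Sketch: run jobs against a shared browser, closing each page after use and
// relaunching the browser every `maxJobsPerBrowser` jobs.
// `launch` is any async function returning a browser, for example
//   () => require('puppeteer').launch()
function createBrowserPool(launch, maxJobsPerBrowser = 50) {
  let browser = null;
  let jobsRun = 0;

  // Closes the current browser, if any, and resets the job counter.
  async function close() {
    const old = browser;
    browser = null;
    jobsRun = 0;
    if (old) await old.close();
  }

  // Runs `job(page)` on a fresh page; the page is closed even if the job throws.
  async function run(job) {
    if (browser && jobsRun >= maxJobsPerBrowser) await close();
    if (!browser) browser = await launch();
    jobsRun += 1;
    const page = await browser.newPage();
    try {
      return await job(page);
    } finally {
      await page.close();
    }
  }

  return { run, close };
}
```

A caller would wrap each task as `pool.run((page) => page.goto(url))` and call `pool.close()` on shutdown, so that no Chrome process is left behind when the Node.js process exits.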

Simplify your Puppeteer deployments with Browserless

To take the hassle out of scaling your scraping, screenshotting or other automations, try Browserless.

It takes a quick connection change to use our thousands of concurrent Chrome browsers. Try it today with a free trial.

Want an easier option? Use our managed browsers

If you want to skip the hassle of deploying Chrome with its many dependencies and memory leaks, then try out Browserless. Our pool of managed browsers is ready to connect to with a change in endpoint, with scaling available from tens to thousands of concurrent sessions.

You can either host just puppeteer-core without Chrome, or use our REST APIs. There are residential proxies, stealth options, HTML exports and other commonly needed features.