Reddit is an incredible platform filled with vibrant communities (subreddits) discussing almost every topic. It’s a treasure trove of valuable information, from niche interests to industry-specific insights. For marketers, analysts, or anyone conducting research, scraping Reddit can reveal trends, gauge user sentiment, and even provide competitive insights. That said, scraping Reddit comes with challenges. CAPTCHA protections, rate limiting, and dynamically loaded content often get in the way, making it tricky to extract data consistently.

Page Structure

Reddit is a treasure trove of discussions, opinions, and content, all organized within its subreddits. If you’re looking to scrape data from Reddit, it’s helpful to understand how the pages are structured and the kind of information you can extract. Let’s break it down step by step.

Use Cases for Scraping Reddit

There are so many ways Reddit data can be used to uncover trends, improve products, or just better understand what’s happening in certain communities. Whether you’re a researcher, marketer, or developer, scraping Reddit can open up some exciting possibilities:

- Market Research: Dive into customer feedback and emerging trends to shape your strategies.

- Content Monitoring: Keep track of discussions, viral memes, or popular topics in your niche.

- Competitor Analysis: See how your competitors are being talked about across subreddits.

- Academic Research: Explore opinions and conversations for cultural or social studies.

- Product Development: Use insights from niche subreddits to refine or enhance your product offerings.

Reddit’s rich and diverse communities make it a go-to platform for these insights.

Post Results Pages

The post results pages are what you see when browsing through a subreddit. These pages give you an overview of what’s being discussed and which posts are getting the most attention. Here’s what you can extract:

- Post Titles: A concise summary of what each thread is about.

- Descriptions or Previews: Short snippets that give you an idea of the content.

- Upvotes and Comments Count: Indicators of a post’s popularity and engagement.

These pages are a great starting point for finding the content that matters most to you.

Post Detail Pages

Once you click on a post, you’ll land on its detail page, which contains more information and insights. These pages are rich with data that’s perfect for deeper analysis. Here’s what you’ll find:

- Upvote Counts: How much support the post has received from the community.

- Comment Counts: The total number of comments, including nested replies.

- Username: The person who posted the content.

- Post Content: The full details of the post, including any media or links.

- Comment Threads: In-depth discussions, complete with replies and conversations.

- Media Links: Shared images, videos, or external URLs in the post or its comments.

These detail pages are where you can dig into the context and engagement of a specific post. Understanding Reddit’s structure makes it much easier to plan your scraping efforts and get the data you need. With this valuable information, you’ll be ready for your next project.

How To Scrape Reddit: Puppeteer Tutorial

Reddit is a goldmine for understanding people's thoughts about products, industries, and specific tools—like CRMs. Let’s say you’re a marketer for a CRM company and want to learn more about what people are discussing: what features they love, the pain points they face, or what your competitors are doing well. By scraping Reddit posts and comments, you can gather this valuable data. Let’s walk through how Puppeteer can help you collect and analyze these insights step by step.

Step 1 - Setting Up Your Environment

You’ll need to set up a few tools to get started with scraping Reddit. Don’t worry—it’s simple and won’t take long!

- Install Node.js: Head to nodejs.org and download Node.js for your system. This is the environment where we’ll write and run our scripts.

- Install Puppeteer: Puppeteer is a library that lets you control a headless browser (or even a full browser). You can install it by running the following command in your terminal: npm install puppeteer

- Brush Up on Basic JavaScript: You don’t need to be a JavaScript pro, but a little familiarity will go a long way when modifying scripts for your use case.

For this example, we’re writing two short scripts:

- Collect Post URLs: We’ll scrape search results for Reddit posts about CRMs.

- Scrape Comments: We’ll dive into the posts we found and extract comments to analyze market sentiment around CRMs.

Step 2 - Collecting the Post URLs from the Search Page

Reddit’s search pages follow a predictable structure, which makes them easier to scrape. Here’s an example URL for searching posts related to “CRM software” in the marketing subreddit:

https://www.reddit.com/r/marketing/search/?q=crm+software

This URL structure can be adjusted based on your target subreddit or search keywords:

- Subreddit: Replace marketing with the subreddit you’re interested in.

- Search Keywords: Replace crm+software with whatever you want to search for.
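If you plan to loop over several subreddits or keyword sets, building the URL in code avoids hand-encoding mistakes. Here’s a minimal sketch, assuming search URLs take the form https://www.reddit.com/r/<subreddit>/search/?q=<keywords>. The name buildSearchUrl is illustrative:

```javascript
// Hypothetical helper: builds a subreddit search URL, assuming Reddit's
// https://www.reddit.com/r/<subreddit>/search/?q=<keywords> pattern.
function buildSearchUrl(subreddit, keywords) {
  const url = new URL(
    `https://www.reddit.com/r/${encodeURIComponent(subreddit)}/search/`
  );
  // URLSearchParams form-encodes the query, so spaces become "+"
  // and characters such as "&" are percent-encoded.
  url.searchParams.set("q", keywords);
  return url.toString();
}

console.log(buildSearchUrl("marketing", "crm software"));
```

Letting URLSearchParams handle encoding means keywords containing spaces or symbols like "&" are passed through safely.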

For this example, let’s scrape URLs from the above search results.

Here’s a Puppeteer script to collect the URLs:
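Here’s a minimal sketch of such a script. It assumes Puppeteer is installed, that search results still render titles as a[data-testid="post-title"] links, and that a capped number of scrolls is enough to load the results you need. The names autoScroll, collectPostLinks, maxScrolls, and main are illustrative, not taken from the original script:

```javascript
// Minimal sketch: scroll a Reddit search page and collect post links.
// Assumes Puppeteer is installed and post titles are rendered as
// a[data-testid="post-title"] (an assumption about current markup).
const POST_TITLE_SELECTOR = 'a[data-testid="post-title"]';

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Scroll one viewport at a time so lazily loaded results get rendered.
// Stops early once the page height stops growing.
async function autoScroll(page, maxScrolls = 10, delayMs = 1500) {
  let lastHeight = await page.evaluate(() => document.body.scrollHeight);
  for (let i = 0; i < maxScrolls; i++) {
    await page.evaluate(() => window.scrollBy(0, window.innerHeight));
    await sleep(delayMs);
    const height = await page.evaluate(() => document.body.scrollHeight);
    if (height === lastHeight) break; // nothing new was loaded
    lastHeight = height;
  }
}

// Returns [{ url, title }] for every post link found after scrolling.
async function collectPostLinks(page, searchUrl, { maxScrolls = 10, delayMs = 1500 } = {}) {
  await page.goto(searchUrl, { waitUntil: "networkidle2" });
  await autoScroll(page, maxScrolls, delayMs);
  const links = await page.$$eval(POST_TITLE_SELECTOR, (anchors) =>
    anchors.map((a) => ({ url: a.href, title: a.textContent.trim() }))
  );
  // Deduplicate by URL in case a post is rendered twice.
  return [...new Map(links.map((l) => [l.url, l])).values()];
}

async function main() {
  const puppeteer = require("puppeteer");
  const browser = await puppeteer.launch({ headless: true });
  try {
    const page = await browser.newPage();
    const posts = await collectPostLinks(
      page,
      "https://www.reddit.com/r/marketing/search/?q=crm+software"
    );
    console.log(`Found ${posts.length} posts`);
  } finally {
    await browser.close();
  }
}

module.exports = { autoScroll, collectPostLinks, main };
```

To run it, call main() from your entry point, for example with main().catch(console.error). Stopping when the page height no longer grows prevents the script from scrolling indefinitely on short result lists.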

What’s Happening?

- Search URL: We navigate to Reddit’s search page for the keyword CRM software in the marketing subreddit.

- Dynamic Content Loading: Reddit’s search results load dynamically as you scroll, so we simulate scrolling to ensure all posts are visible.

- Scraping Post Titles and URLs: We use the selector a[data-testid="post-title"] to capture post titles and their links.

- Saving to CSV: Extracted posts are saved in reddit-post-urls.csv with two columns: Post URL and Post Title.
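The Saving to CSV step needs to quote fields correctly, because post titles often contain commas or quotation marks. Here’s a minimal sketch that uses only Node’s built-in fs module. The names csvField, toCsv, and writeCsv are illustrative, and the original script may well have used a CSV library instead:

```javascript
const fs = require("node:fs");

// Quote a field if it contains a comma, quote, or line break,
// doubling embedded quotes as RFC 4180 describes.
function csvField(value) {
  const text = value == null ? "" : String(value);
  return /[",\r\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text;
}

// Serialize a header row plus data rows into CSV text.
function toCsv(header, rows) {
  return [header, ...rows].map((row) => row.map(csvField).join(",")).join("\n") + "\n";
}

// Write the CSV to disk, overwriting any previous run's output.
function writeCsv(path, header, rows) {
  fs.writeFileSync(path, toCsv(header, rows), "utf8");
}

// Example with placeholder data in a two-column Post URL / Post Title layout.
console.log(
  toCsv(["Post URL", "Post Title"], [
    ["https://www.reddit.com/r/marketing/comments/abc123/example/", "Which CRM do you use, and why?"],
  ])
);
```

Given an array of { url, title } objects, writeCsv("reddit-post-urls.csv", ["Post URL", "Post Title"], posts.map((p) => [p.url, p.title])) produces the file that the next step reads.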

Step 3 - Collecting Comments from the Post Pages

Once we have the post URLs, we can scrape the comments to dig deeper into what people are saying. This step is important if you want to analyze user sentiment around CRMs, what features they need, what frustrations they have, and how competitors are perceived.

Here’s a Puppeteer script to collect comments from the posts:
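Here’s a minimal sketch of the comment-scraping step. It assumes each top-level comment is a shreddit-comment element with depth="0", that the element exposes the username in an author attribute, and that the comment body is a direct child marked slot="comment". These are assumptions about Reddit’s current markup, so verify them in your browser’s developer tools first. The names scrapeTopLevelComments and scrapeAllPosts are illustrative:

```javascript
// Assumption: top-level comments are <shreddit-comment depth="0"> elements
// with an "author" attribute and a direct child marked slot="comment".
const TOP_LEVEL_COMMENT = 'shreddit-comment[depth="0"]';

// Visit one post and return [{ postUrl, author, comment }].
async function scrapeTopLevelComments(page, postUrl) {
  await page.goto(postUrl, { waitUntil: "networkidle2" });
  // Comments render client-side; wait briefly but tolerate posts with none.
  await page.waitForSelector(TOP_LEVEL_COMMENT, { timeout: 10000 }).catch(() => null);
  const rows = await page.$$eval(TOP_LEVEL_COMMENT, (nodes) =>
    nodes.map((node) => {
      // ":scope >" limits the match to this comment's own body, not replies.
      const body = node.querySelector(':scope > [slot="comment"]');
      return {
        author: node.getAttribute("author") || "[unknown]",
        comment: body ? body.textContent.trim() : "",
      };
    })
  );
  // Drop empty bodies (e.g. deleted comments) and tag each row with its post.
  return rows.filter((r) => r.comment).map((r) => ({ postUrl, ...r }));
}

// Scrape posts one at a time, pausing between them to keep request volume low.
async function scrapeAllPosts(page, postUrls, delayMs = 2000) {
  const all = [];
  for (const url of postUrls) {
    try {
      all.push(...(await scrapeTopLevelComments(page, url)));
    } catch (err) {
      console.error(`Skipping ${url}: ${err.message}`);
    }
    await new Promise((r) => setTimeout(r, delayMs));
  }
  return all;
}
```

Catching errors per post means one slow or blocked page is skipped instead of ending the whole run.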

- Reading Input CSV: Loads the list of post URLs from reddit-post-urls.csv.

- Navigating to Each Post: Visits each post URL to access its comments.

- Scraping Top-Level Comments: Targets elements with the selector shreddit-comment[depth="0"] to extract only parent-level comments.

- Output CSV: Saves the results into reddit-comments.csv with three columns: Post URL, Author, and Comment.
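For the Reading Input CSV step, a small parser that understands quoted fields is enough to read the file produced earlier without adding a dependency. Here’s a minimal sketch, assuming the file has a header row and the post URL in the first column. The names parseCsv and readPostUrls are illustrative, and a library such as csv-parse is a sturdier choice for messy input:

```javascript
const fs = require("node:fs");

// Minimal RFC 4180-style parser: handles quoted fields, doubled quotes,
// and commas or line breaks inside quotes. Returns an array of row arrays.
function parseCsv(text) {
  const rows = [];
  let row = [];
  let field = "";
  let inQuotes = false;
  for (let i = 0; i < text.length; i++) {
    const ch = text[i];
    if (inQuotes) {
      if (ch === '"' && text[i + 1] === '"') { field += '"'; i++; } // escaped quote
      else if (ch === '"') inQuotes = false; // closing quote
      else field += ch;
    } else if (ch === '"') {
      inQuotes = true;
    } else if (ch === ",") {
      row.push(field);
      field = "";
    } else if (ch === "\n" || ch === "\r") {
      if (ch === "\r" && text[i + 1] === "\n") i++; // treat CRLF as one break
      row.push(field);
      rows.push(row);
      row = [];
      field = "";
    } else {
      field += ch;
    }
  }
  // Flush the last row when the file does not end with a newline.
  if (field !== "" || row.length > 0) { row.push(field); rows.push(row); }
  return rows;
}

// Read the first column, skipping the header row and blank lines.
function readPostUrls(path) {
  const [, ...rows] = parseCsv(fs.readFileSync(path, "utf8"));
  return rows.map((r) => r[0]).filter((url) => url && url.startsWith("http"));
}
```

Calling readPostUrls("reddit-post-urls.csv") returns the list of URLs for the comment scraper to visit.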

This method gives you a great way to understand your market, and you can easily use it for any industry or niche you’re working on. With Puppeteer, you can automate the whole process, saving you tons of time while still getting valuable insights.

But what happens when things don’t go smoothly? If you’re scraping many URLs or comments, you might start running into errors or even see warnings like the one below when visiting Reddit in your browser.

So, what do you do if Reddit starts blocking you or things aren’t working? Don’t worry; that’s exactly what we’ll cover in the next section. Let’s look at some easy ways to handle these issues and keep your scraping running without a hitch.

Scaling Limitations

Scraping Reddit can provide incredible insights, but it’s not without challenges. As a platform, Reddit has implemented anti-bot measures to protect its content and users. These challenges can make large-scale scraping difficult if you’re not prepared. Let’s dive into the most common obstacles and how they affect your scraping efforts.

CAPTCHA Challenges

One of the first hurdles you might encounter when scraping multiple Reddit pages is CAPTCHA. CAPTCHA challenges are designed to detect and stop automated behavior, and Reddit frequently triggers them when it detects unusual activity. For example, scraping too many pages quickly or exhibiting non-human-like browsing patterns can prompt a CAPTCHA. If it isn’t handled correctly, a CAPTCHA interrupts your scraping workflow and can leave you with incomplete data.

Rate Limiting and IP Blocks

Reddit enforces strict rate limits and can block IP addresses that send too many requests within a short time. When scraping at scale, this can become a significant issue. If your scraper repeatedly sends rapid-fire requests, Reddit’s servers will flag the behavior as suspicious, and your IP may be temporarily or permanently banned. Overcoming this requires careful management of request intervals, often using delays or rotating proxies to spread out requests across multiple IP addresses.
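Both techniques mentioned here, spacing requests out and spreading them across IP addresses, can be sketched in plain JavaScript. This minimal sketch assumes failed requests surface the HTTP status as err.status (429 Too Many Requests or 503). The names withBackoff and makeProxyRotator are illustrative, and the proxy addresses in the usage comment are placeholders:

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Retry fn() with exponential backoff plus random jitter when it fails with
// a rate-limit style error (HTTP 429 or 503). Other errors are rethrown.
async function withBackoff(fn, { retries = 4, baseMs = 1000, maxMs = 30000 } = {}) {
  for (let attempt = 0; ; attempt++) {
    try {
      return await fn();
    } catch (err) {
      const retriable = err && (err.status === 429 || err.status === 503);
      if (!retriable || attempt >= retries) throw err;
      // baseMs, 2x, 4x, ... capped at maxMs, plus up to 50% random jitter.
      const wait = Math.min(maxMs, baseMs * 2 ** attempt);
      await sleep(wait + Math.random() * wait * 0.5);
    }
  }
}

// Round-robin over a proxy list. With Puppeteer, a proxy is typically applied
// per browser through the Chromium flag --proxy-server=<host:port>.
function makeProxyRotator(proxies) {
  if (proxies.length === 0) throw new Error("proxy list is empty");
  let next = 0;
  return () => {
    const proxy = proxies[next];
    next = (next + 1) % proxies.length;
    return proxy;
  };
}

// Usage sketch (placeholder proxy addresses):
// const nextProxy = makeProxyRotator(["proxy1.example:8000", "proxy2.example:8000"]);
// const browser = await puppeteer.launch({ args: [`--proxy-server=${nextProxy()}`] });
```

Because Chromium’s --proxy-server flag applies to the whole browser, rotating usually means launching a new browser (or pointing at a rotating proxy endpoint) for each batch of requests.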

Dynamic Content Loading

Reddit pages, especially search and post detail pages, rely heavily on dynamic content loading. This means some elements, such as comments, votes, or even metadata, are only loaded after specific user interactions, like scrolling. For example, scraping long comment threads or deeply nested replies can be tricky because the content isn’t readily available in the initial HTML. Handling this requires tools like Puppeteer to interact with the page as a user would—scrolling or clicking to load more content.
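You can handle the clicking side of this with a loop that keeps pressing a load-more control until it disappears or a safety cap is reached. Here’s a minimal sketch. Reddit’s actual button markup isn’t given here, so the selector in the usage comment is a placeholder you would replace after inspecting the page. The name expandAll is illustrative:

```javascript
// Repeatedly click the first element matching `selector` until none remain
// or `maxClicks` is reached. Returns how many clicks were made.
async function expandAll(page, selector, { maxClicks = 50, delayMs = 1000 } = {}) {
  let clicks = 0;
  while (clicks < maxClicks) {
    const button = await page.$(selector); // ElementHandle or null
    if (!button) break; // nothing left to expand
    try {
      await button.click();
    } catch {
      break; // element detached or not clickable; stop rather than loop forever
    }
    clicks++;
    // Give newly requested replies time to render before looking again.
    await new Promise((resolve) => setTimeout(resolve, delayMs));
  }
  return clicks;
}

// Usage sketch with a placeholder selector:
// await expandAll(page, "button.load-more-placeholder");
```

The maxClicks cap guards against a button that stays on the page after being clicked, which would otherwise keep the loop running indefinitely.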

Browser Fingerprinting

Reddit’s bot detection systems also analyze browser behavior to identify scraping tools. This includes checking for mismatched user-agent strings, missing browser extensions, or inconsistencies in how your script interacts with the page. Your scraper can be flagged and blocked if it doesn’t closely mimic real browser behavior. To overcome this, tools like Puppeteer or BrowserQL can simulate human-like browsing patterns, minimizing detection risks.
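With plain Puppeteer, a common first step is to make the browser’s self-description consistent. Keep the real browser’s version and platform in the user-agent string and drop only the HeadlessChrome token. Send a matching Accept-Language header, use an ordinary desktop viewport, and pause for irregular intervals between actions. Here’s a minimal sketch under those assumptions. The names applyProfile, consistentUserAgent, and humanPause are illustrative, and the default values are examples. These steps alone do not hide every signal a fingerprinting system can check:

```javascript
// Build a user agent from the browser's own version and platform, removing
// only the "HeadlessChrome" token, so the UA does not contradict other signals.
async function consistentUserAgent(page) {
  const ua = await page.browser().userAgent();
  return ua.replace("HeadlessChrome", "Chrome");
}

// Apply a consistent profile to a Puppeteer page before navigating.
async function applyProfile(
  page,
  { acceptLanguage = "en-US,en;q=0.9", viewport = { width: 1366, height: 768 } } = {}
) {
  await page.setUserAgent(await consistentUserAgent(page));
  await page.setExtraHTTPHeaders({ "Accept-Language": acceptLanguage });
  await page.setViewport(viewport);
}

// Wait a random time between minMs and maxMs (inclusive) so actions are not
// evenly spaced. Resolves with the number of milliseconds waited.
function humanPause(minMs = 800, maxMs = 2500) {
  const ms = minMs + Math.floor(Math.random() * (maxMs - minMs + 1));
  return new Promise((resolve) => setTimeout(() => resolve(ms), ms));
}
```

Deriving the user agent from the running browser avoids the mismatch this paragraph describes, where a hard-coded string claims a different version or operating system than the browser actually reports elsewhere.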

How to Overcome Reddit Scraping Challenges

Scraping Reddit with traditional tools like Puppeteer often means jumping through hoops to deal with challenges like CAPTCHAs, rate limits, and dynamic content.

You’d have to write custom scripts to mimic user behavior, manage proxy servers to rotate IPs, and even integrate CAPTCHA-solving services to keep things running. It can work, but it requires a lot of extra effort and ongoing maintenance.

This is where BrowserQL makes things so much easier. It handles these challenges for you right out of the box. BrowserQL can mimic human-like browsing behavior, which helps avoid detection by Reddit’s bot systems. If a CAPTCHA does show up, it integrates with CAPTCHA-solving tools, so your scraping doesn’t halt.

Its built-in proxy support automatically rotates IPs to avoid getting blocked or rate-limited. Instead of worrying about the technical hurdles, you can focus on getting the data you need without the hassle.

BrowserQL Setup

Sign Up for a Browserless Account and Obtain the API Key

To get started with BrowserQL for Reddit scraping, first create a Browserless account. Once you’ve registered, log in and head to your dashboard. From there, navigate to the API keys section, where you’ll find your unique key.

This key authenticates your queries with BrowserQL, so make sure to copy it and store it securely. The dashboard also provides useful stats on your usage and account activity, which helps you stay on top of things as you scrape.

Set Up the Development Environment

Next, you’ll need to set up your development environment with Node.js. This is where all the magic happens. Install the required tools, such as node-fetch for making API requests and cheerio for parsing HTML.

If you don’t already have Node.js installed, download it from the official site and make sure it works properly. Then, install the required packages with npm: npm install node-fetch cheerio

These libraries will make it easier for your scripts to interact with the BrowserQL API and efficiently process the scraped data.

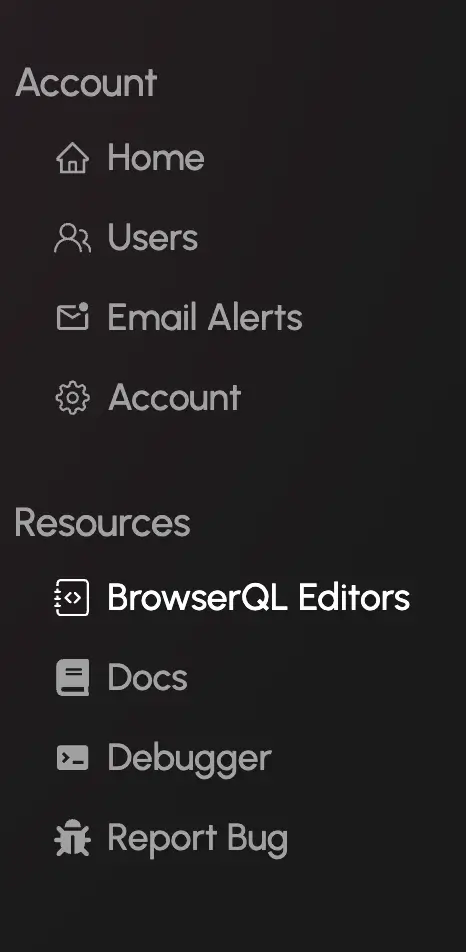

Download the BrowserQL Editor

Download the BrowserQL Editor from your Browserless dashboard to simplify writing and testing your queries. You’ll find it in the left-hand menu, under the “BrowserQL Editors” section.

Navigate to the BrowserQL Editors section from your dashboard.

Download the BrowserQL Editor for your operating system.

Click the download button for your operating system, whether it’s Windows, Mac, or Linux. Once the download is complete, follow the installation steps to get the editor up and running.

The BrowserQL Editor makes crafting and testing your queries straightforward, with a clean and interactive interface. This way, you can debug and fine-tune your queries before running your scripts in production.

Test BrowserQL with a Basic Query

Before scraping Reddit posts or comments, test your BrowserQL setup with a simple query. Use this mutation to load Reddit’s homepage and check that your environment is configured correctly:
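If you want to send the test mutation from Node instead of the editor, the request is an ordinary GraphQL POST. Here’s a minimal sketch. The endpoint URL, the BROWSERLESS_TOKEN environment variable name, and the goto arguments and fields (waitUntil, status, time) reflect our understanding of BrowserQL and are assumptions. Check them against the query the BrowserQL Editor generates for your account. The sketch uses the fetch built into Node 18+ rather than node-fetch, and the names buildBqlRequest and runBql are illustrative:

```javascript
// Assumed endpoint; copy the exact URL shown in your Browserless dashboard.
const BQL_ENDPOINT = "https://production-sfo.browserless.io/chromium/bql";

// Test mutation (assumed field names): open Reddit's homepage and
// report the response status and load time.
const TEST_QUERY = `
mutation TestReddit {
  goto(url: "https://www.reddit.com", waitUntil: firstMeaningfulPaint) {
    status
    time
  }
}`;

// Build the fetch() arguments for a BrowserQL request. The token travels
// as a query-string parameter, so keep it out of source control.
function buildBqlRequest(query, token, variables = {}, endpoint = BQL_ENDPOINT) {
  if (!token) throw new Error("Missing Browserless API token");
  const url = `${endpoint}?token=${encodeURIComponent(token)}`;
  const init = {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ query, variables }),
  };
  return { url, init };
}

// Send the request and return the GraphQL `data` object, surfacing errors.
async function runBql(query, token, variables) {
  const { url, init } = buildBqlRequest(query, token, variables);
  const res = await fetch(url, init);
  if (!res.ok) throw new Error(`BrowserQL HTTP ${res.status}`);
  const json = await res.json();
  if (json.errors && json.errors.length) throw new Error(json.errors[0].message);
  return json.data;
}

// Usage (requires network access and a valid token):
// runBql(TEST_QUERY, process.env.BROWSERLESS_TOKEN).then(console.log);
```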

Run this in the BrowserQL Editor or integrate it into your script. If the setup works, you’ll get the page status and loading time as a response. This confirms that your API key and setup can handle more complex queries. Once this test works, you can move on to crafting queries to scrape Reddit posts, comments, and more.

Writing Our BrowserQL Script for Reddit

Part 1: Collecting Post URLs from a Subreddit Search Page

To collect post URLs from Reddit, we’ll first load a subreddit’s search results page, extract the HTML using BrowserQL, and parse it to find the post links. These links will then be saved into a CSV file for further analysis.

Step 1: Import Libraries and Define Constants

First, import the required libraries and define the constants for BrowserQL. These include the API endpoint, your API token, and the paths for input and output files.

What’s Happening?

- BrowserQL URL: This is the endpoint where scraping requests will be sent.

- API Token: Used to authorize your requests.

- Output File: Specifies where the scraped Reddit post URLs will be stored.

- Search URL: The subreddit search page URL to scrape post links for this use case.

Step 2: Build and Execute the BrowserQL Query

Next, create a BrowserQL mutation to load the search page, wait for it to finish loading, and return the HTML content.

What’s Happening?

- GraphQL Query: Requests BrowserQL to load the search page and return the HTML.

- HTML Parsing: Cheerio identifies all links to individual Reddit posts using the specified CSS selector.

- Data Formatting: Converts relative post paths into full URLs.

- Output to CSV: Saves the collected post URLs in a structured CSV file for future processing.
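Here’s a sketch of the query and parsing steps listed above. The mutation pairs goto with an html field that returns the rendered page; those field names, and the networkIdle wait option, are assumptions about BrowserQL’s schema. The post-link selector reuses a[data-testid="post-title"] from the Puppeteer version, which is also an assumption for this page. Cheerio is required inside parsePostUrls, so you can use the URL-normalizing helper on its own. The names SEARCH_HTML_QUERY, toPostUrls, and parsePostUrls are illustrative:

```javascript
// Assumed BrowserQL mutation: load the page, wait for network idle,
// and return the rendered HTML. Pass the search URL as the $url variable.
const SEARCH_HTML_QUERY = `
mutation SearchHtml($url: String!) {
  goto(url: $url, waitUntil: networkIdle) {
    status
  }
  html {
    html
  }
}`;

// Turn relative hrefs like "/r/marketing/comments/abc/x/" into absolute
// URLs, keep only post links, strip query strings and fragments, and dedupe.
function toPostUrls(hrefs, base = "https://www.reddit.com") {
  const seen = new Set();
  for (const href of hrefs) {
    if (!href) continue;
    const url = new URL(href, base);
    if (!url.pathname.includes("/comments/")) continue;
    url.search = "";
    url.hash = "";
    seen.add(url.toString());
  }
  return [...seen];
}

// Extract post URLs from the HTML returned by BrowserQL (requires cheerio).
function parsePostUrls(html) {
  const cheerio = require("cheerio");
  const $ = cheerio.load(html);
  const hrefs = $('a[data-testid="post-title"]')
    .map((_, el) => $(el).attr("href"))
    .get();
  return toPostUrls(hrefs);
}
```

Filtering on "/comments/" keeps only post permalinks, so user or subreddit links that match the selector by accident are dropped before anything reaches the CSV.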

Part 2: Extracting Post Details

Once we have the post URLs, the next step is to visit each post page and scrape details such as the post title, upvotes, comments, and the main content.

Step 1: Define Constants and Read Post URLs

We’ll start by setting up constants for the BrowserQL API and specifying the paths for input and output CSV files. The script will read the post URLs from the CSV file we created earlier.

Step 2: Fetch HTML for Each Post

For each post URL, we’ll use BrowserQL to load the page and retrieve its HTML content. This allows us to access the entire comment section.
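Here’s a minimal sketch of this step. It sends one BrowserQL request per post and also checks the status Reddit returned, so a block page isn’t silently parsed as an empty thread. The endpoint URL and the goto/html field names are assumptions about BrowserQL. The names fetchPostHtml and POST_HTML_QUERY are illustrative, and fetchImpl defaults to Node 18+’s global fetch:

```javascript
// Assumed endpoint; use the URL shown in your Browserless dashboard.
const BQL_ENDPOINT = "https://production-sfo.browserless.io/chromium/bql";

// Assumed BrowserQL mutation returning the page status and rendered HTML.
const POST_HTML_QUERY = `
mutation PostHtml($url: String!) {
  goto(url: $url, waitUntil: networkIdle) {
    status
  }
  html {
    html
  }
}`;

// Load one post through BrowserQL and return its rendered HTML.
// fetchImpl can be swapped out, e.g. for a stub in tests.
async function fetchPostHtml(postUrl, { token, endpoint = BQL_ENDPOINT, fetchImpl = fetch } = {}) {
  const res = await fetchImpl(`${endpoint}?token=${encodeURIComponent(token)}`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ query: POST_HTML_QUERY, variables: { url: postUrl } }),
  });
  if (!res.ok) throw new Error(`BrowserQL HTTP ${res.status} for ${postUrl}`);
  const { data, errors } = await res.json();
  if (errors && errors.length) throw new Error(errors[0].message);
  // Treat 4xx/5xx responses from Reddit (e.g. a block page) as failures.
  const status = data.goto && data.goto.status;
  if (status && status >= 400) throw new Error(`Reddit returned ${status} for ${postUrl}`);
  return data.html.html;
}
```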

Step 3: Extract Comments from HTML

Using Cheerio, we’ll parse the HTML to extract comments and their metadata, such as the author and upvotes.
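Here’s a minimal sketch of the extraction step. It assumes comments arrive as shreddit-comment elements whose author, score, and depth attributes hold the username, upvote count, and nesting level, with the comment body in a direct child marked slot="comment". These are assumptions about Reddit’s markup, so verify them against the HTML you receive. The cheerio-dependent part is separate from toCommentRow, a pure normalizing function. Both names are illustrative:

```javascript
// Assumption: each comment is a <shreddit-comment> element carrying "author",
// "score", and "depth" attributes, with its body in a direct child marked
// slot="comment". Verify these against the HTML you actually receive.
function extractComments(html, postUrl) {
  const cheerio = require("cheerio");
  const $ = cheerio.load(html);
  return $("shreddit-comment")
    .map((_, el) => {
      const node = $(el);
      return toCommentRow({
        postUrl,
        author: node.attr("author"),
        score: node.attr("score"),
        depth: node.attr("depth"),
        // children() only looks one level down, so replies are excluded.
        text: node.children('[slot="comment"]').text(),
      });
    })
    .get()
    .filter((row) => row.comment);
}

// Normalize raw attribute strings into a clean row; pure, so easy to test.
function toCommentRow({ postUrl, author, score, depth, text }) {
  const upvotes = Number.parseInt(score, 10);
  return {
    postUrl,
    author: author || "[unknown]",
    upvotes: Number.isNaN(upvotes) ? null : upvotes,
    depth: Number.parseInt(depth, 10) || 0,
    comment: (text || "").replace(/\s+/g, " ").trim(),
  };
}
```

Keeping the depth column lets you filter to top-level comments later or analyze reply threads separately, without scraping the page again.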

Step 4: Save Comments to a CSV File

Once the comments are extracted, we’ll save them to a CSV file for easier analysis.

Step 5: Combine the Steps in a Main Process

Finally, we’ll combine all the steps into a single process to extract comments from all the posts in the input CSV.

What’s Happening?

- Fetching HTML: BrowserQL retrieves the raw HTML of the post detail pages, including the comments section.

- Extracting Data: Cheerio parses the comments, capturing the author, comment content, and upvotes.

- Saving Results: Extracted comments are saved to a CSV file for further analysis.
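You can write the main process as a small loop that wires the individual steps together and records failures instead of stopping. Here’s a minimal sketch. The name processPosts is illustrative, and the fetchHtml, extract, and save arguments stand in for whatever implementations you use for fetching, parsing, and saving (for example, appending each post’s rows to the output CSV):

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Run fetch -> extract -> save for every post URL, one at a time.
// The three steps are passed in so the loop works with any implementation.
async function processPosts(postUrls, { fetchHtml, extract, save, delayMs = 2000 }) {
  const summary = { ok: 0, comments: 0, failed: [] };
  for (const url of postUrls) {
    try {
      const html = await fetchHtml(url);
      const rows = extract(html, url);
      await save(rows);
      summary.ok++;
      summary.comments += rows.length;
    } catch (err) {
      // One bad post (timeout, block page) should not stop the whole run.
      summary.failed.push({ url, error: err.message });
    }
    await sleep(delayMs); // space requests out to respect rate limits
  }
  return summary;
}
```

Processing posts sequentially is slower than running them in parallel, but it keeps request volume predictable, and the returned summary shows which URLs to retry.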

Conclusion

BrowserQL makes scraping Reddit straightforward, efficient, and stress-free. Its ability to handle CAPTCHAs, work with dynamic content, and scale to meet larger projects means you can rely on it for everything from gathering comments to analyzing subreddit trends. Whether you’re a marketer looking to understand customer sentiment or a researcher diving into community discussions, BrowserQL gives you the tools to extract the data you need without running into constant roadblocks.

If you’re ready to take your data scraping to the next level, why not try BrowserQL? Sign up for a free trial today and see how easy it is to unlock the full potential of Reddit and other platforms.

FAQ

Is scraping Reddit legal?

Scraping public data on Reddit is typically acceptable, but it’s important to carefully review Reddit’s terms of service to ensure compliance. Always use the data responsibly and ethically.

What can I scrape from Reddit?

With the right tools, you can extract various types of public data, including post titles, upvotes, comments, user profiles, and subreddit statistics.

Does BrowserQL handle Reddit’s rate limits?

Yes. BrowserQL’s design incorporates human-like interactions and browser fingerprint management, which reduce the chances of detection and help you avoid rate limiting or throttling.

How do I avoid getting blocked by Reddit while scraping?

BrowserQL makes it simple to stay under the radar by mimicking natural browsing behavior, integrating proxy support, and minimizing browser fingerprints. This significantly lowers the likelihood of being flagged or blocked.