Introduction

Walmart is a go-to platform for millions of shoppers worldwide and a treasure trove of data for businesses and researchers. Whether you’re looking to analyze product details, monitor pricing, track availability, or dive into customer reviews, scraping Walmart can unlock valuable insights for competitive analysis and market research. But scraping Walmart isn’t a walk in the park. Traditional scraping tools often fall short with challenges like CAPTCHA defenses, IP rate limiting, and dynamic content.

Page Structure

Before scraping Walmart, it’s good to familiarize yourself with the site’s setup. Walmart organizes its data into clear sections, like product search result pages and detailed product pages, which makes it easier to find exactly what you’re looking for. Let’s take a closer look at what’s available.

Use Cases for Scraping Walmart

Scraping Walmart can help you unlock a ton of insights. Whether you’re tracking prices to stay competitive, analyzing reviews to understand customer sentiment, or gathering stock data to monitor availability, there’s so much value to be found. It’s a great way to build data-driven pricing, inventory, and market analysis strategies.

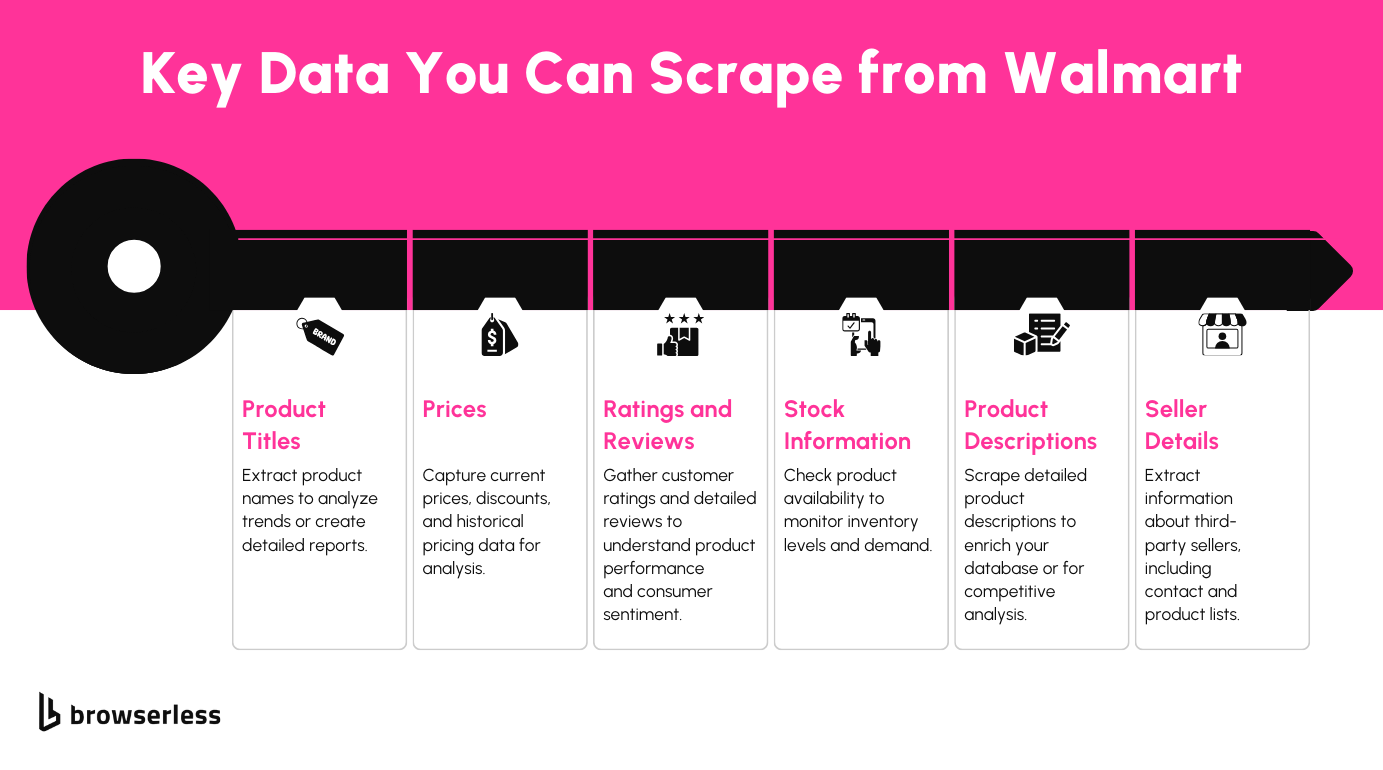

What Data Can You Scrape from Walmart?

Walmart’s site is packed with useful details. You can extract product names, prices, specifications, and even seller information. Customer reviews and stock availability add even more depth, making it a fantastic resource for anyone looking to gather detailed e-commerce data.

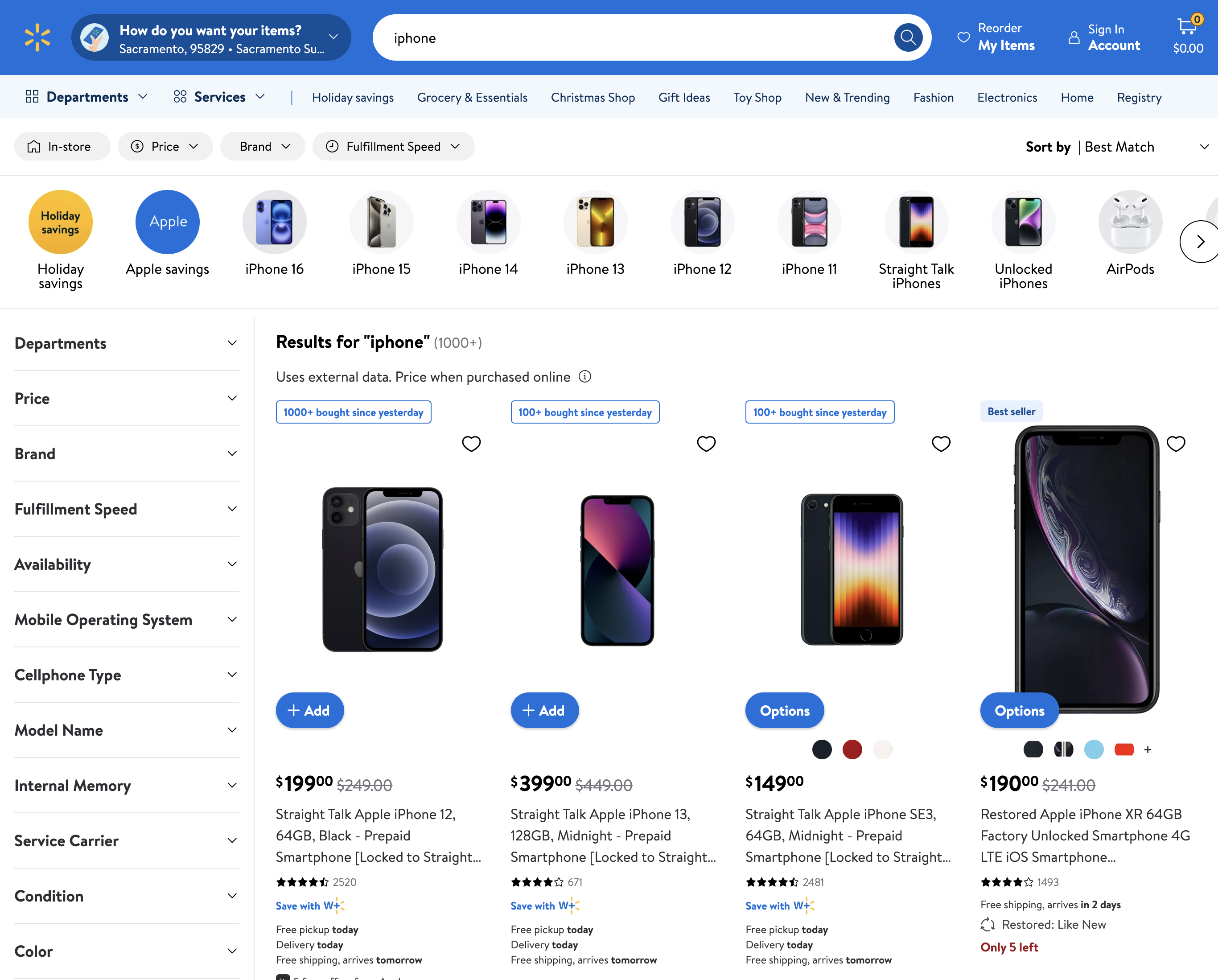

Product Search Results Pages

The search results pages give you a quick overview of products related to your search. These pages contain the basic details you’ll need to start scraping.

- Key Elements:

- Product titles

- Prices

- Star ratings

- Links to product pages

These pages often include infinite scrolling or pagination, so you’ll want to account for that when pulling data.

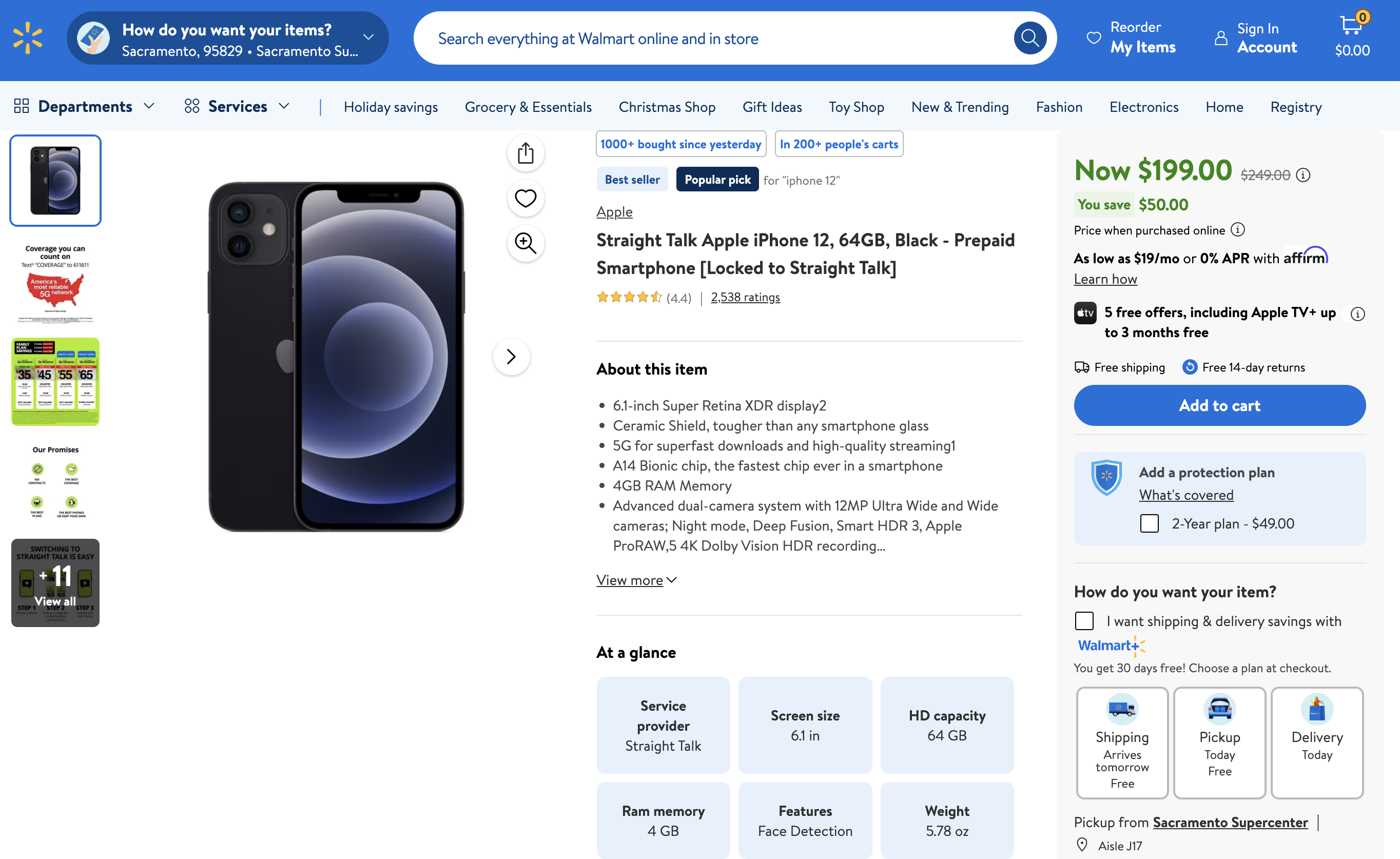

Product Detail Pages

When you click on a product, the detail page opens up a world of information. Here, you’ll find the details that are perfect for deeper analysis.

- Data Points:

- Detailed descriptions

- Specifications (size, materials, etc.)

- Stock status (is it in stock? How many are left?)

- Customer reviews and ratings

- Seller information

These pages have everything you need to understand a product in depth. Some sections may load dynamically, so be ready to handle that when designing your script.

How to Scrape Walmart with Puppeteer

Puppeteer is a powerful library for automating web scraping tasks. Here, we’ll walk through how to use Puppeteer to scrape Walmart product details. We’ll write two scripts: one for collecting product URLs from the search results page and another for scraping product details from those URLs.

Step 1: Setting Up Your Environment

Before we begin, make sure you have the following in place:

- Node.js: Install Node.js if you don’t already have it.

- Puppeteer: Install Puppeteer with the command npm install puppeteer.

- Basic JavaScript knowledge: You’ll need to tweak the script as needed.

We’ll be writing two short scripts: one to collect product URLs from a search page and another to extract product details like name, reviews, rating, and price.

Step 2: Collecting Products from the Search Page

Walmart’s search URLs are pretty straightforward: https://www.walmart.com/search?q={product_name}. For example, if you search for "iPhone," the URL becomes https://www.walmart.com/search?q=iphone. In this script, we’ll use a hardcoded URL to pull product links while adding some human-like interactions to avoid triggering CAPTCHAs.
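As a small illustration of the URL pattern above, here is a minimal sketch of a helper that builds a search URL from a query. The function name buildSearchUrl is hypothetical, and the page parameter is an assumption about how Walmart paginates results, so check it in your browser before relying on it.

```javascript
// Build a Walmart search URL from a free-text query.
// NOTE: the `page` query parameter is an assumption, not confirmed by this article.
const SEARCH_BASE = "https://www.walmart.com/search";

function buildSearchUrl(query, page = 1) {
  const url = new URL(SEARCH_BASE);
  // searchParams takes care of encoding spaces and special characters.
  url.searchParams.set("q", query);
  if (page > 1) {
    url.searchParams.set("page", String(page));
  }
  return url.toString();
}

console.log(buildSearchUrl("iphone"));
// prints https://www.walmart.com/search?q=iphone
console.log(buildSearchUrl("iphone 15 case", 2));
// prints https://www.walmart.com/search?q=iphone+15+case&page=2
```

Letting the URL class handle encoding avoids hand-escaping queries that contain spaces or symbols.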

What’s Happening?

- SEARCH_URL: This is the Walmart search page URL for our query, in this case "iPhone."

- Starting the Browser: We launch Puppeteer with headless: false to make our bot look more like a regular user.

- Scrolling: Simulates natural scrolling behavior with pauses and randomness to avoid detection.

- Mouse Movement: Random mouse movements add another layer of human-like interaction.

- Extracting Links: The script identifies all <a> tags with /ip/ in their href, which are unique to Walmart product pages, and saves the full URLs.

- Saving the Data: All the extracted URLs are written to a CSV file for further processing.
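The steps above can be sketched roughly as follows. This is a minimal sketch rather than the exact script: the helper names (randomPause, cleanProductUrls, toCsv), the scroll distances, and the pause lengths are illustrative assumptions, and dropping query strings from the links is a simplification added here to keep the CSV tidy. Puppeteer is loaded inside the function, so the helpers can be used on their own.

```javascript
const fs = require("fs");

const SEARCH_URL = "https://www.walmart.com/search?q=iphone";
const OUTPUT_FILE = "walmart-product-urls.csv"; // file name reused in Step 3

// Pause for a random duration between min and max milliseconds.
const randomPause = (min, max) =>
  new Promise((resolve) => setTimeout(resolve, min + Math.random() * (max - min)));

// Keep only links whose path contains /ip/, drop query strings, de-duplicate.
function cleanProductUrls(hrefs) {
  const seen = new Set();
  for (const href of hrefs) {
    const base = href.split("?")[0];
    if (base.includes("/ip/")) seen.add(base);
  }
  return [...seen];
}

// One URL per line under a single "url" header.
function toCsv(urls) {
  return ["url", ...urls].join("\n") + "\n";
}

async function collectProductUrls() {
  const puppeteer = require("puppeteer"); // requires: npm install puppeteer
  const browser = await puppeteer.launch({ headless: false });
  try {
    const page = await browser.newPage();
    await page.setViewport({ width: 1280, height: 800 });
    await page.goto(SEARCH_URL, { waitUntil: "networkidle2" });

    // Scroll in uneven steps with pauses, moving the mouse in between.
    for (let i = 0; i < 8; i++) {
      await page.mouse.move(100 + Math.random() * 800, 100 + Math.random() * 500, { steps: 10 });
      await page.evaluate((dy) => window.scrollBy(0, dy), 300 + Math.floor(Math.random() * 400));
      await randomPause(500, 1500);
    }

    // a.href is already absolute when read from the live DOM.
    const hrefs = await page.$$eval("a[href*='/ip/']", (links) => links.map((a) => a.href));
    const urls = cleanProductUrls(hrefs);
    fs.writeFileSync(OUTPUT_FILE, toCsv(urls));
    console.log(`Saved ${urls.length} product URLs to ${OUTPUT_FILE}`);
  } finally {
    await browser.close();
  }
}

// Run with: collectProductUrls().catch(console.error);
```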

Step 3: Collecting Product Details from URLs

With all product URLs collected, we can now extract specific details like the product name, price, and ratings. This script will read URLs from the CSV file, visit each product page, and scrape the desired details.

What’s Happening?

- Read URLs: The script reads product URLs from the walmart-product-urls.csv file and sanitizes them using encodeURI() to handle any formatting issues.

- Visit Pages: Puppeteer navigates to each URL and waits for the page to fully load (networkidle2).

- Extract Details: The script uses querySelector to target specific product details:

  - Name: Extracted from the #main-title element.

  - Price: Scraped from the element with [itemprop="price"].

  - Rating: Combined value of star ratings ([itemprop="ratingValue"]) and total reviews ([itemprop="ratingCount"]).

- Retry on Errors: If a page fails to load or an error occurs during scraping, the script retries up to three times.

- Save to CSV: Scraped data is saved to walmart-product-details.csv in a structured format with columns for the product name, price, rating, and URL.
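A condensed sketch of this flow might look like the following. The selectors and file names come from the description above; the helper names (readUrls, csvCell, withRetries, scrapeProduct) and the one-URL-per-line CSV layout are assumptions made for illustration. Walmart’s markup can change, so treat the selectors as a starting point.

```javascript
const fs = require("fs");

const INPUT_FILE = "walmart-product-urls.csv";
const OUTPUT_FILE = "walmart-product-details.csv";
const MAX_ATTEMPTS = 3;

// Read one URL per line, skipping the header and blank lines (assumed layout).
// Note: encodeURI also re-encodes any "%" already present in a URL.
function readUrls(csvText) {
  return csvText
    .split(/\r?\n/)
    .map((line) => line.trim())
    .filter((line) => line.startsWith("http"))
    .map((line) => encodeURI(line));
}

// Quote a value for CSV, doubling any embedded quotes.
function csvCell(value) {
  return `"${String(value ?? "").replace(/"/g, '""')}"`;
}

// Call an async function up to `attempts` times, rethrowing the last error.
async function withRetries(fn, attempts = MAX_ATTEMPTS) {
  let lastError;
  for (let i = 1; i <= attempts; i++) {
    try {
      return await fn();
    } catch (err) {
      lastError = err;
      console.warn(`Attempt ${i} failed: ${err.message}`);
    }
  }
  throw lastError;
}

// Load one product page and read the fields out of the DOM.
async function scrapeProduct(page, url) {
  await page.goto(url, { waitUntil: "networkidle2" });
  return page.evaluate(() => {
    const text = (sel) => document.querySelector(sel)?.textContent.trim() ?? "";
    const stars = text('[itemprop="ratingValue"]');
    const count = text('[itemprop="ratingCount"]');
    return {
      name: text("#main-title"),
      price: text('[itemprop="price"]'),
      rating: stars ? `${stars} (${count} reviews)` : "",
    };
  });
}

async function main() {
  const puppeteer = require("puppeteer"); // requires: npm install puppeteer
  const urls = readUrls(fs.readFileSync(INPUT_FILE, "utf8"));
  const browser = await puppeteer.launch({ headless: false });
  const rows = [["name", "price", "rating", "url"].join(",")];
  try {
    const page = await browser.newPage();
    for (const url of urls) {
      try {
        const d = await withRetries(() => scrapeProduct(page, url));
        rows.push([d.name, d.price, d.rating, url].map(csvCell).join(","));
      } catch (err) {
        console.error(`Giving up on ${url}: ${err.message}`);
      }
    }
  } finally {
    await browser.close();
  }
  fs.writeFileSync(OUTPUT_FILE, rows.join("\n") + "\n");
}

// Run with: main().catch(console.error);
```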

These scripts give you a straightforward way to scrape Walmart using Puppeteer. They’re also flexible, and you can expand them to handle additional data points or more complex workflows.

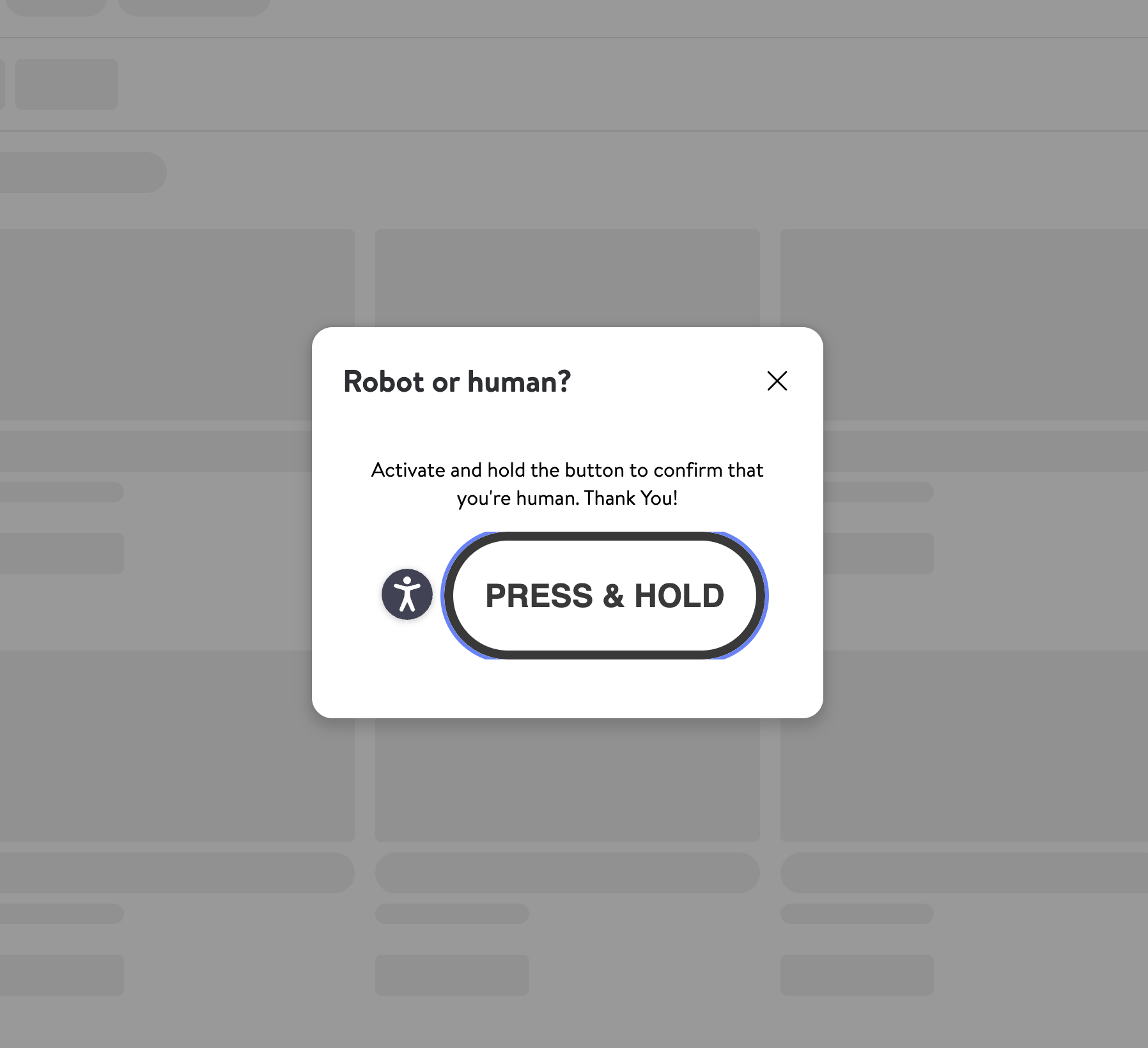

However, while running the script in Step 3, you might run into issues when scraping a large number of product URLs, such as a CAPTCHA challenge like this:

So how do you handle this? That's what we will cover in the next section.

Scaling Limitations

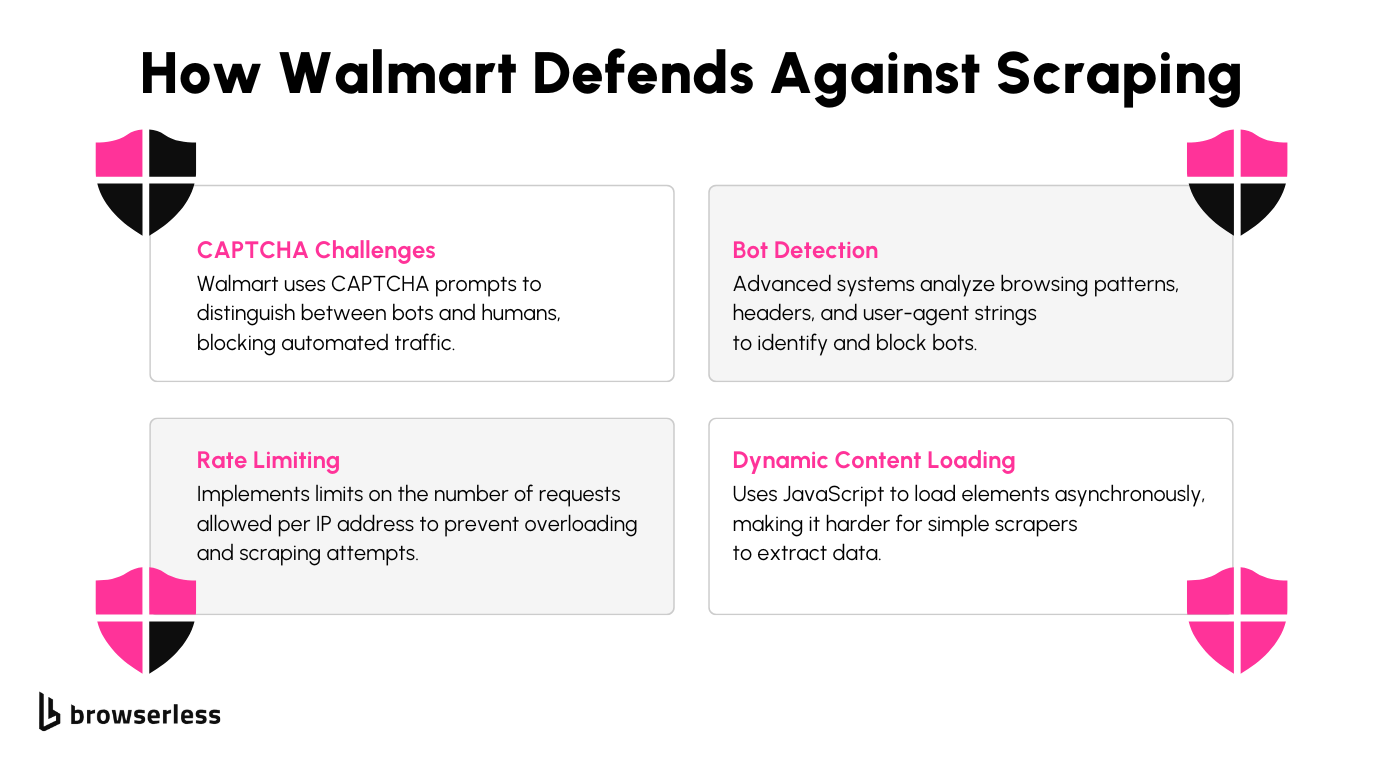

You might have encountered CAPTCHAs when trying to scrape Walmart’s product listings. These challenges are part of Walmart’s measures to prevent automated tools from accessing their site. Let’s look at the hurdles they’ve put in place and how they impact scraping efforts.

CAPTCHA Challenges

CAPTCHAs are designed to distinguish between humans and bots. When requests to Walmart’s servers become too frequent or suspicious, CAPTCHA challenges are triggered. These challenges often block access until the user completes a task, such as identifying specific objects in images. For automated scripts, this presents a significant roadblock.

IP Blocking

If traffic from an IP address seems abnormal, Walmart can block that address entirely. This happens when patterns such as repeated requests in a short time or requests to a large number of pages occur. Such behavior deviates from what a typical user would do, leading to the server denying access from that source.

Detecting Non-Human Behaviors

Behaviors like rapid scrolling, clicking links at precise intervals, or accessing pages faster than a human could indicate the presence of a bot. Walmart’s systems monitor for these patterns and flag any activity that doesn’t align with how real users interact with their site.
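One common way to make timing less regular is to add random jitter between actions. The sketch below is only an illustration of that idea: the function names and default delays are assumptions, and uneven pacing alone is unlikely to get past a determined anti-bot system.

```javascript
// Return a delay in milliseconds drawn uniformly from [baseMs, baseMs + jitterMs).
// `random` is injectable so the function is easy to test.
function jitteredDelay(baseMs, jitterMs, random = Math.random) {
  return Math.floor(baseMs + random() * jitterMs);
}

// Resolve after the given number of milliseconds.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Run async tasks one at a time with an uneven pause after each one.
async function runPaced(tasks, baseMs = 2000, jitterMs = 3000) {
  const results = [];
  for (const task of tasks) {
    results.push(await task());
    await sleep(jitteredDelay(baseMs, jitterMs));
  }
  return results;
}

// Example: runPaced(urls.map((url) => () => scrape(url))), where scrape is your own function.
```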

Dynamically Loaded Content

Many elements on Walmart’s pages, like reviews and product availability, are loaded dynamically using JavaScript. These elements may not be immediately available in the HTML source. Traditional scrapers struggle with this because they can’t process the JavaScript required to render the full page content.
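With a browser automation tool such as Puppeteer, the usual approach is to wait for the element to be rendered before reading it. Here is a minimal sketch, assuming a Puppeteer page object; the helper name textWhenReady and the 10-second default timeout are illustrative choices.

```javascript
// Wait until `selector` is rendered, then return its trimmed text.
// Returns null if waiting fails, for example because the timeout expired.
async function textWhenReady(page, selector, timeoutMs = 10000) {
  try {
    await page.waitForSelector(selector, { timeout: timeoutMs });
  } catch (err) {
    return null;
  }
  return page.$eval(selector, (el) => el.textContent.trim());
}

// Example: const rating = await textWhenReady(page, '[itemprop="ratingValue"]');
```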

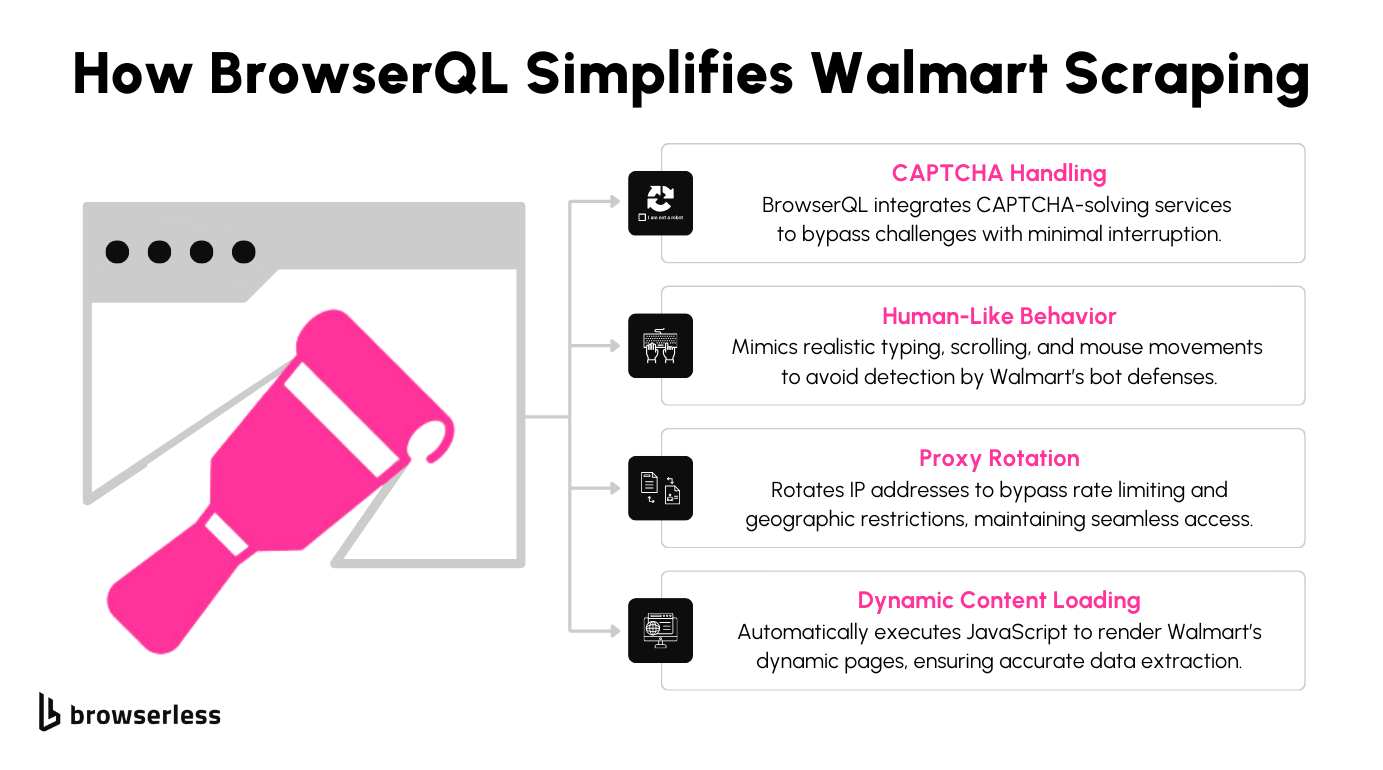

Overcoming These Limitations with BrowserQL

BrowserQL offers a way to handle these challenges effectively. It simulates human-like interactions, such as realistic scrolling and clicking patterns, making it harder for Walmart’s systems to distinguish it from a real user.

BrowserQL also integrates tools to solve CAPTCHAs, allowing the script to continue when a challenge appears. Additionally, it supports proxy management, enabling requests to come from different IP addresses and reducing the likelihood of being blocked.

Setting Up BrowserQL for Walmart Scraping

Step 1: Create a Browserless Account and Retrieve Your API Key

The first step to using BrowserQL for Walmart scraping is signing up for a Browserless account. Once registered, log in and head over to your account dashboard. There, you’ll find a section dedicated to API keys.

Locate your unique API key and copy it. This will be used to authenticate all your requests to the BrowserQL API. The dashboard also provides insights into your usage and subscription details, so you can easily keep track of your activity and API calls.

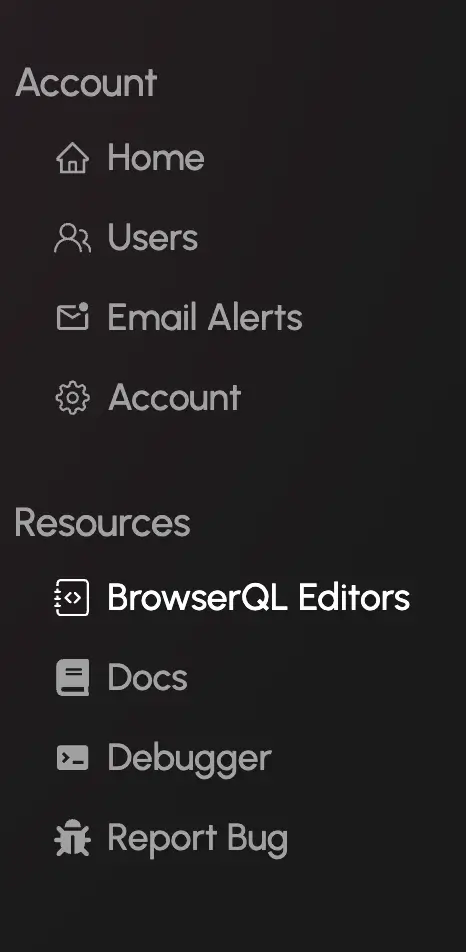

Caption: Navigate to the BrowserQL Editors section from your dashboard.

Step 2: Set Up Your Development Environment

Before writing your scripts, make sure your development environment is ready. Install Node.js, which you’ll use to run your scripts. Once Node.js is installed, open your terminal and use the following commands to install the required packages:

These libraries allow your script to send requests to the BrowserQL API and process the HTML responses you’ll receive from Walmart product pages.

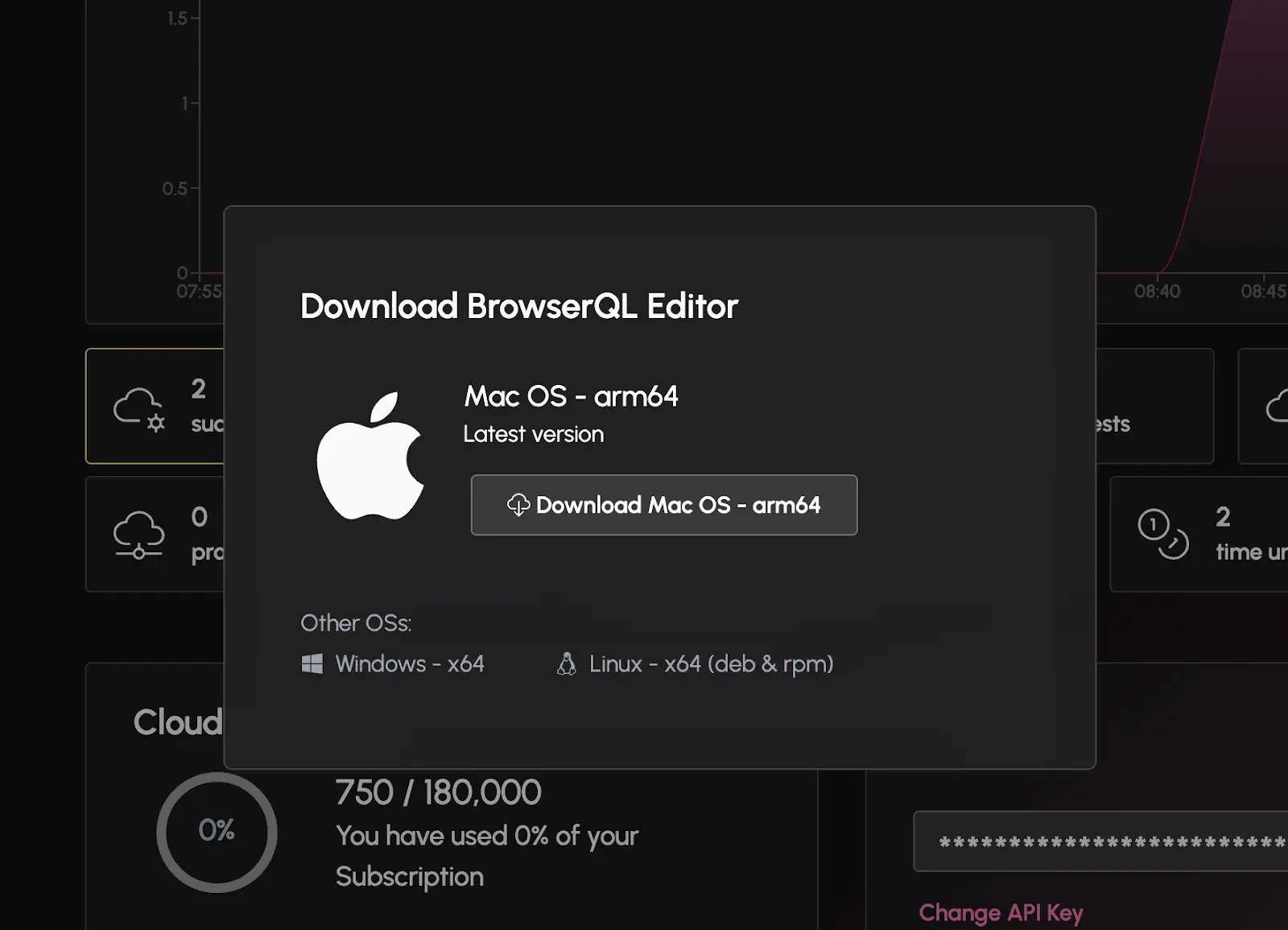

Step 3: Download and Install the BrowserQL Editor

BrowserQL offers an editor that simplifies crafting and testing queries. You can download this editor directly from your Browserless dashboard:

- Navigate to the BrowserQL Editors section in the left-hand sidebar.

- Select the version compatible with your operating system, such as macOS, Windows, or Linux.

- Click the download link and follow the installation steps.

Caption: Download the BrowserQL Editor for your operating system.

Once installed, the BrowserQL Editor provides a simple and interactive interface for testing and optimizing your scraping queries before integrating them into your Walmart scraping scripts.

Step 4: Run a Basic Test Query

Before jumping into Walmart scraping, it’s essential to test your setup to ensure everything works as expected. Here’s a sample query to test BrowserQL by loading Walmart’s homepage:

Copy and paste this query into the BrowserQL Editor and execute it. The response should return a status code and the time it took to load the page. This test confirms that your API key and setup are functioning correctly. Once your test query works, you’re ready to create more advanced scripts to scrape product data, reviews, and other details from Walmart.
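Based on the description above, a test query along these lines should work. It is a sketch reconstructed from that description, not necessarily the exact query: the goto mutation with status and time fields matches what the response is said to return, while the operation name and the networkIdle wait condition are assumptions. It is stored in a JavaScript string here so it can be reused in a script later; paste only the mutation text into the editor.

```javascript
// BrowserQL test mutation: load the homepage and report HTTP status and load time.
const TEST_QUERY = `
mutation TestWalmart {
  goto(url: "https://www.walmart.com", waitUntil: networkIdle) {
    status
    time
  }
}`;

// Print the mutation so it can be copied into the BrowserQL Editor.
console.log(TEST_QUERY.trim());
```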

Writing Our BrowserQL Script

Part 1: Collecting Product URLs from the Search Page

To gather product URLs from Walmart, we’ll begin by loading the search results page, extracting the HTML with BrowserQL, and parsing it to find product links. These links will then be stored in a CSV file for further processing.

Step 1: Import Libraries and Define Constants

Start by importing the necessary libraries and defining constants for the script. These include the BrowserQL API endpoint, an API token, and paths for the input and output files.

What’s Happening?

- BrowserQL URL: Specifies the API endpoint for executing scraping requests.

- API Token: Authorizes requests made to the BrowserQL service.

- Output File: Identifies where the extracted product URLs will be saved.

- Search URL: Represents the Walmart search page to scrape for product listings.
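These constants might be declared as shown below. Treat every value as a placeholder: the endpoint URL is an assumption (copy the exact one for your region from the Browserless dashboard), BROWSERLESS_TOKEN is a hypothetical environment variable name, and the output file name reuses the one from the Puppeteer section.

```javascript
// Assumed endpoint; confirm the exact URL in your Browserless dashboard.
const BROWSERQL_URL = "https://production-sfo.browserless.io/chromium/bql";
// Read the token from the environment rather than hardcoding it.
const API_TOKEN = process.env.BROWSERLESS_TOKEN || "YOUR_API_TOKEN";
const OUTPUT_FILE = "walmart-product-urls.csv";
const SEARCH_URL = "https://www.walmart.com/search?q=iphone";

// Build the full request URL with the token passed as a query parameter.
function endpointWithToken(baseUrl = BROWSERQL_URL, token = API_TOKEN) {
  const url = new URL(baseUrl);
  url.searchParams.set("token", token);
  return url.toString();
}
```

Keeping the token in an environment variable means the script can be shared or committed without exposing your key.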

Step 2: Build and Execute the BrowserQL Query

Next, we’ll create a BrowserQL mutation to open the search results page, wait for the content to load, and fetch the HTML.

What’s Happening?

- GraphQL Query: Sends instructions to BrowserQL for scraping the search page.

- HTML Parsing: Extracts product links by identifying elements containing /ip/ in their href.

- URL Formatting: Converts relative paths into absolute URLs by appending the base domain.

- CSV Output: Stores the collected product links in a structured CSV file.
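The flow described above can be sketched as follows. Several details are assumptions: the mutation fields (goto, waitForTimeout, html) and the htmlContent alias reflect our understanding of BrowserQL and should be checked in the editor, the global fetch requires Node.js 18 or later, and a regular expression stands in for a proper HTML parser to keep the example dependency-free.

```javascript
const fs = require("fs");

const BASE_URL = "https://www.walmart.com";

// Mutation: open the page, pause briefly, then return the rendered HTML.
function buildSearchQuery(searchUrl) {
  return `
mutation CollectProductLinks {
  goto(url: ${JSON.stringify(searchUrl)}, waitUntil: networkIdle) {
    status
  }
  waitForTimeout(time: 3000) {
    time
  }
  htmlContent: html {
    html
  }
}`;
}

// Pull href values containing /ip/ out of raw HTML and make them absolute.
// The regex is a simple stand-in for a real HTML parser such as Cheerio.
function extractProductUrls(html, baseUrl = BASE_URL) {
  const urls = new Set();
  for (const match of html.matchAll(/href="([^"]*\/ip\/[^"]*)"/g)) {
    const href = match[1].replace(/&amp;/g, "&");
    urls.add(new URL(href, baseUrl).toString());
  }
  return [...urls];
}

// POST the mutation to the BrowserQL endpoint and return the page HTML.
async function fetchSearchHtml(endpoint, searchUrl) {
  const response = await fetch(endpoint, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ query: buildSearchQuery(searchUrl) }),
  });
  const { data } = await response.json();
  return data.htmlContent.html;
}

// Fetch, parse, and write one URL per line under a "url" header.
async function main(endpoint, searchUrl, outputFile) {
  const html = await fetchSearchHtml(endpoint, searchUrl);
  const urls = extractProductUrls(html);
  fs.writeFileSync(outputFile, ["url", ...urls].join("\n") + "\n");
  console.log(`Saved ${urls.length} URLs to ${outputFile}`);
}

// Run with: main(endpointUrlWithToken, searchUrl, "walmart-product-urls.csv").catch(console.error);
```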

Part 2: Extracting Product Details

With the product URLs saved, the next step is to visit each product page and scrape details such as the product name, price, rating, and the number of reviews.

Step 1: Define Constants and Read Input CSV

The script defines constants for the BrowserQL API and paths for the input and output CSV files. It then reads product URLs from the input CSV.

What’s Happening?

- Reading URLs: Extracts product links from the input CSV file.

- Validation: Filters out empty or invalid entries.

- Output Collection: Gathers the URLs in an array for processing.
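A minimal sketch of the reading and validation step, using only Node’s built-in modules. The input file name and the assumption that URLs sit in the first column are illustrative; adjust them to match the CSV produced in Part 1.

```javascript
const fs = require("fs");

const INPUT_FILE = "walmart-product-urls.csv"; // assumed name from Part 1

// True when the value parses as an http(s) URL.
function isHttpUrl(value) {
  try {
    const { protocol } = new URL(value);
    return protocol === "http:" || protocol === "https:";
  } catch {
    return false;
  }
}

// Take the first column of each row, strip surrounding quotes, keep valid URLs.
// The header row and blank lines fail the URL check and are dropped.
function parseUrlColumn(csvText) {
  return csvText
    .split(/\r?\n/)
    .map((row) => row.split(",")[0].trim().replace(/^"|"$/g, ""))
    .filter(isHttpUrl);
}

function readProductUrls(path = INPUT_FILE) {
  return parseUrlColumn(fs.readFileSync(path, "utf8"));
}

// Example: const urls = readProductUrls();
```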

Step 2: Fetch HTML Using BrowserQL

For each product URL, the script retrieves the HTML content using a GraphQL mutation via BrowserQL.

What’s Happening?

- GraphQL Mutation: Sends a request to BrowserQL to load the product page and retrieve its HTML content.

- Error Handling: Logs network or data retrieval issues for troubleshooting.

- HTML Retrieval: Returns the HTML content for further processing.
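Here is one way this step could look, with the error handling made explicit. As before, the mutation fields and the htmlContent alias are assumptions to verify in the BrowserQL Editor. The fetchImpl parameter is a hypothetical addition that defaults to the global fetch (Node.js 18 or later) and makes the function easy to test with a stub.

```javascript
// Mutation: load the product page and return its HTML.
function buildProductQuery(url) {
  return `
mutation FetchProductPage {
  goto(url: ${JSON.stringify(url)}, waitUntil: networkIdle) {
    status
  }
  htmlContent: html {
    html
  }
}`;
}

// Returns the page HTML, or null when the request or the query fails.
async function fetchProductHtml(endpoint, productUrl, fetchImpl = fetch) {
  try {
    const response = await fetchImpl(endpoint, {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ query: buildProductQuery(productUrl) }),
    });
    if (!response.ok) {
      throw new Error(`HTTP ${response.status}`);
    }
    // GraphQL reports query-level problems in an `errors` array.
    const { data, errors } = await response.json();
    if (errors && errors.length > 0) {
      throw new Error(errors.map((e) => e.message).join("; "));
    }
    return data.htmlContent.html;
  } catch (err) {
    console.error(`Failed to fetch ${productUrl}: ${err.message}`);
    return null;
  }
}
```

Returning null instead of throwing lets the calling loop skip a failed product and continue with the rest of the list.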

Step 3: Parse Product Details and Save to CSV

Using Cheerio, the script extracts specific product details from the HTML content and writes them to an output CSV file.

What’s Happening?

- Parsing Details: Extracts the product name, price, rating, and review count from the HTML.

- Data Structuring: Formats the parsed data into an array of objects.

- CSV Output: Saves the collected product details to a CSV file for further analysis.
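The parsing and saving step might be sketched like this. The selectors are borrowed from the Puppeteer section and are assumptions about Walmart’s current markup; the column order, helper names, and output file name are illustrative. Cheerio is loaded inside the parsing function, so the CSV helpers work even before it is installed.

```javascript
const fs = require("fs");

// Extract product fields from raw HTML. Selectors are assumptions and may need updating.
function parseProductDetails(html, url) {
  const cheerio = require("cheerio"); // requires: npm install cheerio
  const $ = cheerio.load(html);
  const text = (selector) => $(selector).first().text().trim();
  return {
    name: text("#main-title"),
    price: text('[itemprop="price"]'),
    rating: text('[itemprop="ratingValue"]'),
    reviews: text('[itemprop="ratingCount"]'),
    url,
  };
}

const COLUMNS = ["name", "price", "rating", "reviews", "url"];

// Quote a value for CSV, doubling any embedded quotes.
const csvCell = (value) => `"${String(value ?? "").replace(/"/g, '""')}"`;

// Turn an array of product objects into CSV text with a header row.
function toCsv(products) {
  const rows = products.map((p) => COLUMNS.map((c) => csvCell(p[c])).join(","));
  return [COLUMNS.join(","), ...rows].join("\n") + "\n";
}

function saveProducts(products, outputFile = "walmart-product-details.csv") {
  fs.writeFileSync(outputFile, toCsv(products));
}

// Example: saveProducts([parseProductDetails(html, url)]);
```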

Conclusion

BrowserQL makes scraping Walmart smoother and more efficient. It removes the headaches of bypassing CAPTCHAs, navigating dynamic content, and avoiding anti-bot systems. Whether researching products, tracking prices, or analyzing reviews, BrowserQL lets you do it all seamlessly, without interruptions. By handling even the trickiest parts of scraping e-commerce sites like Walmart, BrowserQL opens up new possibilities for gathering valuable data. Ready to simplify your data collection and scale your insights? Sign up for BrowserQL and discover what it can do for Walmart and other platforms!

FAQ

Is scraping Walmart legal?

Scraping public product data is generally permissible, but it’s important to review Walmart’s terms of service to ensure compliance and avoid abusive scraping practices that could disrupt their platform.

What can I scrape from Walmart?

You can extract product details, pricing, stock availability, customer reviews, and seller information, all of which are valuable for market research and competitive analysis.

How does BrowserQL handle Walmart’s anti-bot defenses?

BrowserQL uses human-like interactions, supports proxies, and minimizes browser fingerprints to reduce detection. This combination allows for smooth scraping even with Walmart’s defenses in place.

Can I scrape all product reviews on Walmart?

Yes, BrowserQL supports handling pagination and dynamic loading, enabling you to collect all reviews for a product without missing any data.

Does Walmart block automated scrapers?

Walmart employs strong anti-bot measures, such as CAPTCHA challenges and IP rate limiting. BrowserQL is specifically engineered to overcome these obstacles.

Can I scrape Walmart without triggering CAPTCHAs?

BrowserQL’s human-like browsing behavior significantly reduces CAPTCHA occurrences. For those rare instances where CAPTCHAs appear, BrowserQL integrates with CAPTCHA-solving services to maintain uninterrupted scraping.